
How should you configure the Data Factory copy activity?

HOTSPOT

You use Azure Data Factory to prepare data to be queried by Azure Synapse Analytics serverless SQL pools.

Files are initially ingested into an Azure Data Lake Storage Gen2 account as 10 small JSON files. Each file contains the same data attributes and data from a subsidiary of your company.

You need to move the files to a different folder and transform the data to meet the following requirements:

- Provide the fastest possible query times.
- Automatically infer the schema from the underlying files.

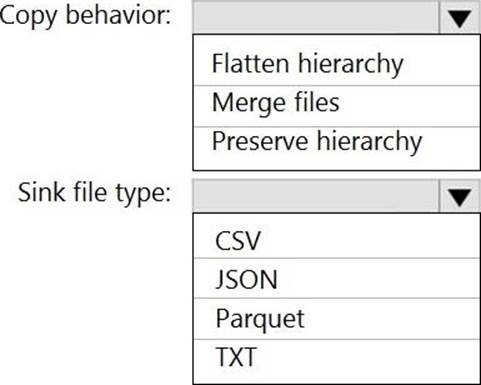

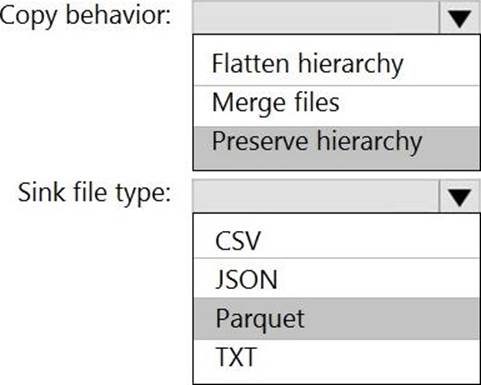

How should you configure the Data Factory copy activity? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Answer:

Explanation:

Box 1: Preserve hierarchy

Compared to the flat namespace on Blob storage, the hierarchical namespace greatly improves the performance of directory management operations, which improves overall job performance.

Box 2: Parquet

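Azure Data Factory supports the Parquet format for Azure Data Lake Storage Gen2.

Parquet is a columnar format that stores its schema in the file metadata, so serverless SQL pools can infer the schema automatically and read only the columns a query needs.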
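As a rough illustration of how the two selections map onto a copy activity definition, the sketch below builds the activity JSON as a Python dictionary. The activity name and the dataset names `SubsidiaryJsonFiles` and `SubsidiaryParquet` are hypothetical placeholders, and the wildcard filter is an assumption about how the source files are named. Only the `copyBehavior` value and the Parquet sink type reflect the answer above.

```python
import json

# copyBehavior values accepted by file-based sinks in Data Factory.
ALLOWED_COPY_BEHAVIORS = {"PreserveHierarchy", "FlattenHierarchy", "MergeFiles"}


def build_copy_activity(copy_behavior: str = "PreserveHierarchy") -> dict:
    """Return a copy activity definition that reads JSON and writes Parquet.

    Dataset and activity names are hypothetical placeholders.
    """
    if copy_behavior not in ALLOWED_COPY_BEHAVIORS:
        raise ValueError(f"Unsupported copyBehavior: {copy_behavior}")

    return {
        "name": "CopyJsonToParquet",  # hypothetical activity name
        "type": "Copy",
        "inputs": [
            {"referenceName": "SubsidiaryJsonFiles", "type": "DatasetReference"}
        ],
        "outputs": [
            {"referenceName": "SubsidiaryParquet", "type": "DatasetReference"}
        ],
        "typeProperties": {
            "source": {
                "type": "JsonSource",
                "storeSettings": {
                    "type": "AzureBlobFSReadSettings",  # ADLS Gen2 read settings
                    "recursive": True,
                    "wildcardFileName": "*.json",  # assumed file naming
                },
            },
            "sink": {
                "type": "ParquetSink",  # Box 2: Parquet
                "storeSettings": {
                    "type": "AzureBlobFSWriteSettings",  # ADLS Gen2 write settings
                    "copyBehavior": copy_behavior,  # Box 1
                },
            },
        },
    }


activity = build_copy_activity()
print(json.dumps(activity, indent=2))
```

Passing a different `copy_behavior` value to `build_copy_activity` shows how the other options in the answer area would appear in the same definition.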
