
Which combination of steps should the Data Scientist take to reduce the number of false negative predictions by the model?

A Data Scientist is developing a machine learning model to classify whether a financial transaction is fraudulent. The labeled data available for training consists of 100,000 non-fraudulent observations and 1,000 fraudulent observations.

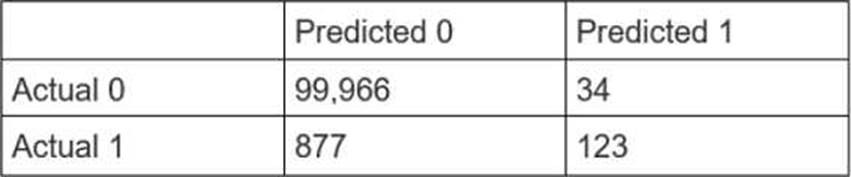

The Data Scientist applies the XGBoost algorithm to the data, resulting in the following confusion matrix when the trained model is applied to a previously unseen validation dataset.

The accuracy of the model is 99.1%, but the Data Scientist has been asked to reduce the number of false negatives.

Which combination of steps should the Data Scientist take to reduce the number of false negative predictions by the model? (Select TWO.)

A. Change the XGBoost eval_metric parameter to optimize based on rmse instead of error.

B. Increase the XGBoost scale_pos_weight parameter to adjust the balance of positive and negative weights.

C. Increase the XGBoost max_depth parameter because the model is currently underfitting the data.

D. Change the XGBoost eval_metric parameter to optimize based on AUC instead of error.

E. Decrease the XGBoost max_depth parameter because the model is currently overfitting the data.

Answer: B, D

Explanation:

The XGBoost algorithm is a popular machine learning technique for classification problems. It is based on the idea of boosting, which is to combine many weak learners (decision trees) into a strong learner (ensemble model).

XGBoost can handle imbalanced data through the scale_pos_weight parameter, which controls the balance of positive and negative weights in the objective function. A typical value to consider is the ratio of negative cases to positive cases, which for this dataset is 100,000 / 1,000 = 100. Increasing the parameter makes the algorithm pay more attention to the minority (positive) class, which reduces the number of false negatives, usually at the cost of some additional false positives.
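As a rough illustration of the ratio heuristic, the sketch below derives a starting value for scale_pos_weight from the class counts given in the question. The `params` dictionary and the `binary:logistic` objective are assumptions made for illustration; the question does not show the actual training configuration.

```python
# Class counts taken from the question text.
n_negative = 100_000  # non-fraudulent observations
n_positive = 1_000    # fraudulent observations

# Common starting heuristic: ratio of negative to positive examples.
scale_pos_weight = n_negative / n_positive

# Hypothetical parameter dictionary (assumed, not from the question).
# A dictionary of this shape could be passed to xgboost.train(params, dtrain).
params = {
    "objective": "binary:logistic",
    "scale_pos_weight": scale_pos_weight,
}

print(params["scale_pos_weight"])  # 100.0
```

The ratio is only a starting point; in practice the value would be tuned against a validation metric that reflects the cost of missed fraud.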

XGBoost can also report different evaluation metrics on the validation data through the eval_metric parameter. The metric in the question is error, the misclassification rate. This metric can be misleading for imbalanced data because it does not account for the different costs of false positives and false negatives: with 1,000 fraudulent cases among 101,000 observations, a model that flags nothing as fraud still scores about 99% accuracy. A better metric is AUC, the area under the receiver operating characteristic (ROC) curve. The ROC curve plots the true positive rate against the false positive rate across threshold values, so the AUC measures how well the model separates the two classes regardless of the threshold. Note that eval_metric does not change the training objective; it determines the score used for validation, early stopping, and hyperparameter tuning. Setting it to auc therefore steers model selection toward models that rank fraudulent transactions above legitimate ones, which helps reduce false negatives.
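To make the contrast between accuracy and AUC concrete, here is a minimal pure-Python sketch. The baseline uses the class counts from the question; the `labels` and `scores` lists are made-up toy values, not the validation data from the question, and the helper names are hypothetical.

```python
def accuracy(tp, fp, tn, fn):
    """Fraction of all predictions that are correct."""
    return (tp + tn) / (tp + fp + tn + fn)


def recall(tp, fn):
    """Fraction of actual positives that the model catches."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def roc_auc(labels, scores):
    """AUC as the probability that a random positive outscores a
    random negative, counting ties as half a win."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Baseline that labels every transaction as non-fraudulent,
# using the class counts from the question.
baseline_acc = accuracy(tp=0, fp=0, tn=100_000, fn=1_000)
baseline_rec = recall(tp=0, fn=1_000)
print(round(baseline_acc, 4), baseline_rec)  # 0.9901 0.0

# Toy scores (illustrative only): one fraud case ranks below a
# legitimate transaction, so the AUC falls short of 1.0.
labels = [0, 0, 0, 1, 0, 1]
scores = [0.1, 0.2, 0.3, 0.35, 0.4, 0.9]
print(roc_auc(labels, scores))  # 0.875
```

The baseline reaches about 99% accuracy while catching no fraud at all, which is why the error metric says little here, whereas AUC penalizes every fraud case that is ranked below a legitimate transaction.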

Therefore, the combination of steps that reduces the number of false negatives is to increase the scale_pos_weight parameter and change the eval_metric parameter to AUC.

References: XGBoost Parameters; XGBoost for Imbalanced Classification

