What is the most likely cause?

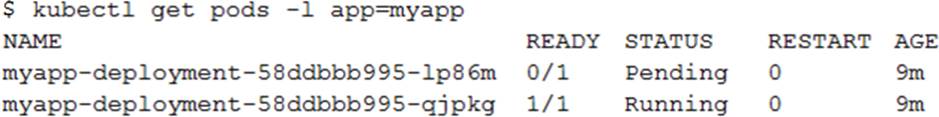

You create a Deployment with 2 replicas in a Google Kubernetes Engine cluster that has a single preemptible node pool. After a few minutes, you use kubectl to examine the status of your Pod and observe that one of them is still in Pending status:

What is the most likely cause?

A . The pending Pod’s resource requests are too large to fit on a single node of the cluster.

B . Too many Pods are already running in the cluster, and there are not enough resources left to schedule the pending Pod.

C . The node pool is configured with a service account that does not have permission to pull the container image used by the pending Pod.

D . The pending Pod was originally scheduled on a node that has been preempted between the creation of the Deployment and your verification of the Pods’ status. It is currently being rescheduled on a new node.

Answer: B

Explanation:

B (Too many Pods are already running in the cluster, and there are not enough resources left to schedule the pending Pod) is the right answer. Both replicas are created from the same Pod template, so they have identical resource requests. Because the other replica was scheduled, those requests evidently fit on a node, which rules out A.
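
To make the distinction between options A and B concrete, here is a minimal Python sketch of the CPU part of the scheduler's resource-fit check. It is a simplification, assuming CPU is the only resource that matters (the real kube-scheduler also checks memory and applies other filters); the `Node` class, the `diagnose` function, and the millicore figures in the usage example are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    allocatable_millicpu: int  # CPU the node offers to Pods, in millicores
    requested_millicpu: int    # CPU already requested by Pods scheduled on it


def diagnose(pod_request_millicpu: int, nodes: list[Node]) -> str:
    """Classify why a Pod with the given CPU request would or would not schedule."""
    # Option A: the request exceeds what any single node offers, even when empty.
    if all(pod_request_millicpu > n.allocatable_millicpu for n in nodes):
        return "too large for any node"
    # Option B: some node could hold it when empty, but no node has enough left.
    if all(pod_request_millicpu > n.allocatable_millicpu - n.requested_millicpu
           for n in nodes):
        return "insufficient remaining resources"
    return "schedulable"
```

With two hypothetical nodes that each offer 940m CPU and have 240m and 340m left after existing Pods, `diagnose(500, [Node("node-1", 940, 700), Node("node-2", 940, 600)])` returns `"insufficient remaining resources"`: the 500m request fits on an empty node, so A does not apply, but no node has enough spare capacity, which is the situation in B.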

When a Deployment has some Pods running and others stuck in Pending, the cause is usually a lack of resources on the nodes. In a typical sample output for this case, the pending Pod's events (shown by `kubectl describe pod`) report insufficient CPU on the Kubernetes nodes, so you must either enable cluster autoscaling on the node pool or manually scale up the number of nodes.
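
As a rough sketch of the manual scale-up option, the function below estimates how many nodes to add so that every pending Pod has room. It assumes CPU requests only, identical new nodes, and a simple first-fit-decreasing placement; this is a heuristic for illustration, not necessarily how GKE's cluster autoscaler decides. The function name and its parameters are hypothetical.

```python
def extra_nodes_needed(pending_millicpu: list[int],
                       free_millicpu: list[int],
                       new_node_allocatable: int) -> int:
    """Estimate how many identical nodes to add so all pending CPU requests fit.

    Uses first-fit decreasing: place the largest requests first, each into the
    first node (existing or newly added) that has enough free CPU.
    """
    free = list(free_millicpu)  # copy so the caller's list is not modified
    added = 0
    for request in sorted(pending_millicpu, reverse=True):
        if request > new_node_allocatable:
            # Even an empty new node cannot hold this Pod (the option A case).
            raise ValueError(
                f"request of {request}m exceeds a node's {new_node_allocatable}m")
        for i, available in enumerate(free):
            if request <= available:
                free[i] -= request
                break
        else:
            # No existing or added node has room: add one and place the Pod on it.
            free.append(new_node_allocatable - request)
            added += 1
    return added
```

For example, with existing nodes that have 240m and 340m free and new nodes offering 940m, `extra_nodes_needed([500], [240, 340], 940)` returns `1`.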
