Question Set 1

HOTSPOT

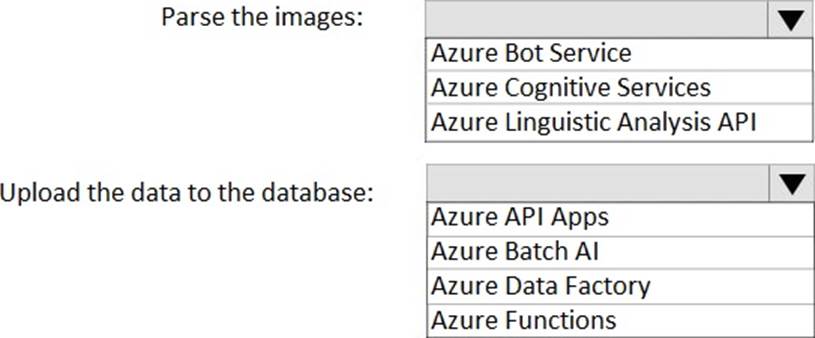

You are designing an application to parse images of business forms and upload the data to a database. The upload process will occur once a week. You need to recommend which services to use for the application. The solution must minimize infrastructure costs.

Which services should you recommend? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Azure Cognitive Services

Azure Cognitive Services include image-processing algorithms to smartly identify, caption, index, and moderate your pictures and videos.

Not: Azure Linguistic Analytics API, which provides advanced natural language processing over raw text.

Box 2: Azure Data Factory

Azure Data Factory (ADF) is a service designed to let developers integrate disparate data sources. It is somewhat like SSIS in the cloud, managing data that lives both on-premises and in the cloud.

It provides access to on-premises data in SQL Server and cloud data in Azure Storage (Blob and Tables) and Azure SQL Database.

References: https://azure.microsoft.com/en-us/services/cognitive-services/

https://www.jamesserra.com/archive/2014/11/what-is-azure-data-factory/

HOTSPOT

You plan to deploy an Azure Data Factory pipeline that will perform the following:

– Move data from on-premises to the cloud.

– Consume Azure Cognitive Services APIs.

You need to recommend which technologies the pipeline should use. The solution must minimize custom code.

What should you include in the recommendation? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Self-hosted Integration Runtime

A self-hosted IR can run copy activities between cloud data stores and a data store in a private network.

Not Azure-SSIS Integration Runtime, as you would need to write custom code.

Box 2: Azure Logic Apps

Azure Logic Apps helps you orchestrate and integrate different services by providing 100+ ready-to-use connectors, ranging from on-premises SQL Server or SAP to Microsoft Cognitive Services.

Incorrect:

Not Azure API Management: Use Azure API Management as a turnkey solution for publishing APIs to external and internal customers.

References: https://docs.microsoft.com/en-us/azure/data-factory/concepts-integration-runtime

https://docs.microsoft.com/en-us/azure/logic-apps/logic-apps-examples-and-scenarios

HOTSPOT

You need to build an interactive website that will accept uploaded images, and then ask a series of predefined questions based on each image.

Which services should you use? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Azure Bot Service

Box 2: Computer Vision

The Computer Vision "Analyze an image" feature returns information about visual content found in an image. Use tagging, domain-specific models, and descriptions in four languages to identify content and label it with confidence. Use Object Detection to get the location of thousands of objects within an image. Apply the adult/racy settings to help detect potential adult content. Identify image types and color schemes in pictures.
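As a minimal sketch of calling the "Analyze an image" feature over REST, the Python below builds (but does not send) the HTTP request. It assumes the v3.2 REST path `/vision/v3.2/analyze`, the `visualFeatures` query parameter, and the `Ocp-Apim-Subscription-Key` header; the endpoint, key, and image URL are placeholders.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request


def build_analyze_request(endpoint: str, key: str, image_url: str,
                          features=("Tags", "Description", "Objects")) -> Request:
    """Build (but do not send) a Computer Vision v3.2 Analyze Image request."""
    # visualFeatures selects which analyses run (tags, captions, object detection, ...).
    query = urlencode({"visualFeatures": ",".join(features)})
    url = f"{endpoint.rstrip('/')}/vision/v3.2/analyze?{query}"
    # The image is passed by URL in a JSON body.
    body = json.dumps({"url": image_url}).encode("utf-8")
    headers = {
        "Ocp-Apim-Subscription-Key": key,  # access key of the Cognitive Services resource
        "Content-Type": "application/json",
    }
    return Request(url, data=body, headers=headers, method="POST")


# Placeholder values: replace with your own resource endpoint, key, and image.
req = build_analyze_request(
    "https://my-vision-resource.cognitiveservices.azure.com/",
    "<access-key>",
    "https://example.com/uploaded-image.jpg",
)
```

Sending it with `urllib.request.urlopen(req)` would return a JSON document whose fields depend on the requested features.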

References: https://azure.microsoft.com/en-us/services/cognitive-services/computer-vision/

You are designing an AI solution that will analyze millions of pictures.

You need to recommend a solution for storing the pictures. The solution must minimize costs.

Which storage solution should you recommend?

- A . an Azure Data Lake store

- B . Azure File Storage

- C . Azure Blob storage

- D . Azure Table storage

C

Explanation:

Data Lake Store is somewhat more expensive than Blob storage, although their prices are close. Blob storage also offers more pricing options based on how frequently you need to access your data (for example, the hot, cool, and archive access tiers).
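The access-tier choice mentioned above can be sketched as a simple heuristic. The 30-day and 180-day values are the minimum storage durations for the cool and archive tiers; the "one read per month" cut-off for frequent access is a hypothetical threshold, not Azure guidance.

```python
from enum import Enum


class Tier(Enum):
    HOT = "Hot"
    COOL = "Cool"
    ARCHIVE = "Archive"


# Minimum storage durations; deleting or moving a blob earlier incurs an early-deletion charge.
COOL_MIN_DAYS = 30
ARCHIVE_MIN_DAYS = 180

# Hypothetical cut-off for "frequently accessed"; tune it from your own access logs.
FREQUENT_READS_PER_MONTH = 1.0


def suggest_tier(reads_per_month: float, retention_days: int,
                 tolerates_rehydration: bool) -> Tier:
    """Heuristic access-tier choice for a blob, based on access pattern and lifetime."""
    if reads_per_month >= FREQUENT_READS_PER_MONTH or retention_days < COOL_MIN_DAYS:
        return Tier.HOT
    if tolerates_rehydration and retention_days >= ARCHIVE_MIN_DAYS:
        # Archive is offline: a blob must be rehydrated (which can take hours) before it is read.
        return Tier.ARCHIVE
    return Tier.COOL
```

For millions of pictures that are analyzed once and then rarely read, such a rule would move most of them out of the hot tier, which is where Blob storage's cost advantage comes from.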

Reference: http://blog.pragmaticworks.com/azure-data-lake-vs-azure-blob-storage-in-data-warehousing

You are configuring data persistence for a Microsoft Bot Framework application. The application requires a structured NoSQL cloud data store.

You need to identify a storage solution for the application. The solution must minimize costs.

What should you identify?

- A . Azure Blob storage

- B . Azure Cosmos DB

- C . Azure HDInsight

- D . Azure Table storage

D

Explanation:

Table storage is a NoSQL key-value store for rapid development using massive semi-structured datasets. Cosmos DB lets you develop applications by using popular NoSQL APIs.

The two services target different scenarios and have different pricing models.

Azure Table storage is aimed at high capacity in a single region (with an optional read-only secondary region but no failover), indexes only by PartitionKey/RowKey (PK/RK), and uses storage-optimized pricing. The Azure Cosmos DB Table API aims for high throughput (single-digit millisecond latency), global distribution (multiple failover regions), SLA-backed predictable performance with automatic indexing of every attribute/property, and a pricing model focused on throughput.
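To make the "indexed by PK/RK only" point concrete, here is a toy in-memory model in Python (not the Azure Tables SDK). The bot-state layout, with the channel as PartitionKey and the conversation ID as RowKey, is a hypothetical example.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical in-memory stand-in for a table: each entity is addressed by (PartitionKey, RowKey).
Table = Dict[Tuple[str, str], dict]


def point_lookup(table: Table, partition_key: str, row_key: str) -> Optional[dict]:
    # Served directly by the PK/RK index: the cheapest and fastest query shape.
    return table.get((partition_key, row_key))


def query_by_property(table: Table, name: str, value,
                      partition_key: Optional[str] = None) -> List[dict]:
    # Other properties are not indexed, so the filter is evaluated entity by entity:
    # within one partition if a PartitionKey is given, otherwise across the whole table.
    return [
        entity
        for (pk, _), entity in table.items()
        if (partition_key is None or pk == partition_key) and entity.get(name) == value
    ]


# Hypothetical bot-state layout: PartitionKey = channel, RowKey = conversation ID.
bot_state: Table = {
    ("teams", "conv-001"): {"user": "alice", "turns": 4},
    ("teams", "conv-002"): {"user": "bob", "turns": 9},
    ("webchat", "conv-003"): {"user": "alice", "turns": 1},
}
```

Bot state is almost always read by conversation, so point lookups dominate and the cheaper Table storage fits; Cosmos DB's automatic indexing of every property only pays off when other query shapes matter.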

References: https://db-engines.com/en/system/Microsoft+Azure+Cosmos+DB%3BMicrosoft+Azure+Table+Storage

You have an Azure Machine Learning model that is deployed to a web service.

You plan to publish the web service by using the name ml.contoso.com. You need to recommend a solution to ensure that access to the web service is encrypted.

Which three actions should you recommend? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

- A . Generate a shared access signature (SAS)

- B . Obtain an SSL certificate

- C . Add a deployment slot

- D . Update the web service

- E . Update DNS

- F . Create an Azure Key Vault

BDE

Explanation:

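The process of securing a new web service or an existing one is as follows:

– Get a domain name.

– Get a digital certificate.

– Deploy or update the web service with SSL enabled.

– Update your DNS to point to the web service.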
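The certificate only helps if it names the host that DNS will publish (here, ml.contoso.com). The Python below is a simplified sketch of the name check a TLS client performs against a certificate's DNS subject alternative names. It supports only a whole-label wildcard in the left-most position and omits other rules (such as public-suffix restrictions), so treat it as an illustration rather than a validator to rely on.

```python
def name_matches(pattern: str, host: str) -> bool:
    """Match one certificate DNS name against a host name (simplified).

    Comparison is case-insensitive; '*' is honored only as the entire left-most
    label, where it matches exactly one label.
    """
    pattern, host = pattern.lower().rstrip("."), host.lower().rstrip(".")
    p_labels, h_labels = pattern.split("."), host.split(".")
    if len(p_labels) != len(h_labels):
        return False  # a wildcard never spans more than one label
    if p_labels[0] == "*":
        return p_labels[1:] == h_labels[1:]
    return p_labels == h_labels


def cert_covers(san_dns_names, host: str) -> bool:
    """True if any DNS name in the certificate matches the host."""
    return any(name_matches(name, host) for name in san_dns_names)
```

For example, a certificate issued for `*.contoso.com` covers `ml.contoso.com` but not `a.ml.contoso.com`.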

Your company recently deployed several hardware devices that contain sensors.

The sensors generate new data on an hourly basis. The data generated is stored on-premises and retained for several years. During the past two months, the sensors generated 300 GB of data. You plan to move the data to Azure and then perform advanced analytics on the data. You need to recommend an Azure storage solution for the data.

Which storage solution should you recommend?

- A . Azure Queue storage

- B . Azure Cosmos DB

- C . Azure Blob storage

- D . Azure SQL Database

C

Explanation:

References: https://docs.microsoft.com/en-us/azure/architecture/data-guide/technology-choices/data-storage
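A quick projection of the volume in the question helps size the problem: 300 GB in two months is about 150 GB per month, so multi-year retention quickly reaches terabytes of append-only files. The sketch below assumes the rate stays constant and takes "several years" as 5 years, which is a hypothetical value.

```python
def projected_volume_tb(observed_gb: float, observed_months: int, years: int) -> float:
    """Project stored volume in decimal TB, assuming the observed rate stays constant."""
    monthly_gb = observed_gb / observed_months
    return monthly_gb * 12 * years / 1000


# From the question: 300 GB in two months (150 GB per month).
# Hypothetical: "several years" taken as 5 years of retention.
total_tb = projected_volume_tb(300, 2, 5)
```

The arithmetic only sizes the data; the choice of Blob storage rests on it being low-cost object storage for large volumes of files that analytics engines can read.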

You plan to design an application that will use data from Azure Data Lake and perform sentiment analysis by using Azure Machine Learning algorithms.

The developers of the application use a mix of Windows- and Linux-based environments. The developers contribute to shared GitHub repositories.

You need all the developers to use the same tool to develop the application.

What is the best tool to use? More than one answer choice may achieve the goal.

- A . Microsoft Visual Studio Code

- B . Azure Notebooks

- C . Azure Machine Learning Studio

- D . Microsoft Visual Studio

C

Explanation:

References: https://github.com/MicrosoftDocs/azure-docs/blob/master/articles/machine-learning/studio/algorithmchoice.md

You have several AI applications that use an Azure Kubernetes Service (AKS) cluster. The cluster supports a maximum of 32 nodes.

You discover that occasionally and unpredictably, the application requires more than 32 nodes.

You need to recommend a solution to handle the unpredictable application load.

Which scaling method should you recommend?

- A . horizontal pod autoscaler

- B . cluster autoscaler

- C . manual scaling

- D . Azure Container Instances

B

Explanation:

B: To keep up with application demands in Azure Kubernetes Service (AKS), you may need to adjust the number of nodes that run your workloads. The cluster autoscaler component can watch for pods in your cluster that can’t be scheduled because of resource constraints. When issues are detected, the number of nodes is increased to meet the application demand. Nodes are also regularly checked for a lack of running pods, with the number of nodes then decreased as needed. This ability to automatically scale up or down the number of nodes in your AKS cluster lets you run an efficient, cost-effective cluster.
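The behavior described above can be illustrated with a deliberately simplified model in Python: scale up when pods are pending, scale down when fewer nodes would still fit the running pods, and always stay within a minimum and maximum node count. The uniform `pods_per_node` capacity is a hypothetical simplification; the real component simulates scheduling and applies utilization thresholds and delays.

```python
import math
from dataclasses import dataclass


@dataclass
class NodePool:
    nodes: int
    min_count: int
    max_count: int
    pods_per_node: int  # hypothetical: uniform pod capacity per node


def reconcile(pool: NodePool, pending_pods: int, running_pods: int) -> int:
    """One simplified autoscaler pass; returns the new node count."""
    needed = math.ceil((running_pods + pending_pods) / pool.pods_per_node)
    if pending_pods > 0:
        # Unschedulable pods: add nodes, never remove them in the same pass.
        target = max(pool.nodes, needed)
    else:
        # No pending pods: shrink to the nodes the running pods actually need.
        target = needed
    pool.nodes = min(pool.max_count, max(pool.min_count, target))
    return pool.nodes
```

In AKS you do not write this loop yourself: you enable the managed cluster autoscaler and give it bounds, for example with `az aks update --enable-cluster-autoscaler --min-count <n> --max-count <m>`.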

Reference: https://docs.microsoft.com/en-us/azure/aks/cluster-autoscaler

You deploy an infrastructure for a big data workload.

You need to run Azure HDInsight and Microsoft Machine Learning Server. You plan to set the RevoScaleR compute contexts to run rx function calls in parallel.

What are three compute contexts that you can use for Machine Learning Server? Each correct answer presents a complete solution. NOTE: Each correct selection is worth one point.

- A . SQL

- B . Spark

- C . local parallel

- D . HBase

- E . local sequential

ABC

Explanation:

Remote computing is available for specific data sources on selected platforms. The following tables document the supported combinations.

– RxInSqlServer, sqlserver: Remote compute context. Target server is a single database node (SQL Server 2016 R Services or SQL Server 2017 Machine Learning Services). Computation is parallel, but not distributed.

– RxSpark, spark: Remote compute context. Target is a Spark cluster on Hadoop.

– RxLocalParallel, localpar: This compute context is often used to enable controlled, distributed computations that rely on instructions you provide rather than on a built-in scheduler on Hadoop. You can use it for manual distributed computing.

References: https://docs.microsoft.com/en-us/machine-learning-server/r/concept-what-is-compute-context

Your company has 1,000 AI developers who are responsible for provisioning environments in Azure.

You need to control the type, size, and location of the resources that the developers can provision.

What should you use?

- A . Azure Key Vault

- B . Azure service principals

- C . Azure managed identities

- D . Azure Security Center

- E . Azure Policy

You are designing an AI solution in Azure that will perform image classification.

You need to identify which processing platform will provide you with the ability to update the logic over time. The solution must have the lowest latency for inferencing without having to batch.

Which compute target should you identify?

- A . graphics processing units (GPUs)

- B . field-programmable gate arrays (FPGAs)

- C . central processing units (CPUs)

- D . application-specific integrated circuits (ASICs)

B

Explanation:

FPGAs, such as those available on Azure, provide performance close to ASICs. They are also flexible and reconfigurable over time, to implement new logic.

Incorrect Answers:

D: ASICs are custom circuits, such as Google’s Tensor Processing Units (TPUs), that provide the highest efficiency. They can’t be reconfigured as your needs change.

References: https://docs.microsoft.com/en-us/azure/machine-learning/service/concept-accelerate-with-fpgas

You have a solution that runs on a five-node Azure Kubernetes Service (AKS) cluster. The cluster uses an N-series virtual machine.

An Azure Batch AI process runs once a day and rarely on demand.

You need to recommend a solution to maintain the cluster configuration when the cluster is not in use. The solution must not incur any compute costs.

What should you include in the recommendation?

- A . Downscale the cluster to one node

- B . Downscale the cluster to zero nodes

- C . Delete the cluster

A

Explanation:

An AKS cluster has one or more nodes.

References: https://docs.microsoft.com/en-us/azure/aks/concepts-clusters-workloads

HOTSPOT

You are designing an AI solution that will be used to find buildings in aerial pictures.

Users will upload the pictures to an Azure Storage account. A separate JSON document will contain metadata for the pictures.

The solution must meet the following requirements:

– Store metadata for the pictures in a data store.

– Run a custom vision Azure Machine Learning module to identify the buildings in a picture and the position of the buildings’ edges.

– Run a custom mathematical module to calculate the dimensions of the buildings in a picture based on the metadata and data from the vision module.

You need to identify which Azure infrastructure services are used for each component of the AI workflow. The solution must execute as quickly as possible.

What should you identify? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Azure Blob Storage

Containers and blobs support custom metadata, represented as HTTP headers.
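Custom metadata is sent as `x-ms-meta-<name>` headers on Blob storage requests. Assuming the JSON document is a flat object of name/value pairs, the Python sketch below turns it into those headers. It approximates the service's rule that metadata names must be valid C# identifiers with Python's `str.isidentifier`, which is close but not identical.

```python
import json


def metadata_headers(metadata_json: str) -> dict:
    """Convert a flat JSON metadata document into x-ms-meta-* request headers."""
    metadata = json.loads(metadata_json)
    headers = {}
    for name, value in metadata.items():
        # Blob metadata names must follow C# identifier rules; isidentifier() approximates that.
        if not name.isidentifier():
            raise ValueError(f"invalid metadata name: {name!r}")
        headers[f"x-ms-meta-{name}"] = str(value)
    return headers


# Hypothetical metadata for one aerial picture.
headers = metadata_headers('{"altitude_m": 1200, "camera": "A1", "captured": "2020-05-01"}')
```

The headers can be supplied when the blob is uploaded or later with a Set Blob Metadata call; the Azure SDKs accept the same name/value dictionary without the prefix.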

Box 2: NV

The NV-series enables powerful remote visualisation workloads and other graphics-intensive applications backed by the NVIDIA Tesla M60 GPU.

Note: The N-series is a family of Azure Virtual Machines with GPU capabilities. GPUs are ideal for compute and graphics-intensive workloads, helping customers to fuel innovation through scenarios like high-end remote visualisation, deep learning and predictive analytics.

Box 3: F

F-series VMs feature a higher CPU-to-memory ratio. Example use cases include batch processing, web servers, analytics and gaming.

Incorrect:

A-series VMs have CPU performance and memory configurations best suited for entry level workloads like development and test.

References: https://azure.microsoft.com/en-in/pricing/details/virtual-machines/series/

Your company has recently deployed 5,000 Internet-connected sensors for a planned AI solution.

You need to recommend a computing solution to perform a real-time analysis of the data generated by the sensors.

Which computing solution should you recommend?

- A . an Azure HDInsight Storm cluster

- B . Azure Notification Hubs

- C . an Azure HDInsight Hadoop cluster

- D . an Azure HDInsight R cluster

HOTSPOT

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You plan to deploy an application that will perform image recognition. The application will store image data in two Azure Blob storage stores named Blob1 and Blob2.

You need to recommend a security solution that meets the following requirements:

– Access to Blob1 must be controlled by using a role.

– Access to Blob2 must be time-limited and constrained to specific operations.

What should you recommend using to control access to each blob store? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

References: https://docs.microsoft.com/en-us/azure/storage/common/storage-auth

You deploy an application that performs sentiment analysis on the data stored in Azure Cosmos DB.

Recently, you loaded a large amount of data to the database. The data was for a customer named Contoso, Ltd.

You discover that queries for the Contoso data are slow to complete, and the queries slow the entire application.

You need to reduce the amount of time it takes for the queries to complete. The solution must minimize costs.

What is the best way to achieve the goal? More than one answer choice may achieve the goal. Select the BEST answer.

- A . Change the request units.

- B . Change the partitioning strategy.

- C . Change the transaction isolation level.

- D . Migrate the data to the Cosmos DB database.

B

Explanation:

Throughput provisioned for a container is divided evenly among physical partitions.
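Because each logical partition (all items sharing one partition key value) lives in a single physical partition, a key that puts all of Contoso's items under one value caps those queries at one partition's share of the throughput. A small worked example; the 10,000 RU/s, the 5 physical partitions, and the alternative key are hypothetical numbers.

```python
def per_partition_ru(provisioned_ru: float, physical_partitions: int) -> float:
    # Provisioned throughput is divided evenly among physical partitions.
    return provisioned_ru / physical_partitions


def ru_ceiling_for_data(provisioned_ru: float, physical_partitions: int,
                        partitions_spanned: int) -> float:
    """Upper bound on RU/s available to data spread over `partitions_spanned` physical partitions."""
    spanned = min(partitions_spanned, physical_partitions)
    return per_partition_ru(provisioned_ru, physical_partitions) * spanned


# Hypothetical container: 10,000 RU/s over 5 physical partitions.
hot_key = ru_ceiling_for_data(10_000, 5, partitions_spanned=1)     # all Contoso data under one key
spread_key = ru_ceiling_for_data(10_000, 5, partitions_spanned=5)  # e.g. a key combining customer and date
```

Changing the partition key raises the ceiling for the Contoso queries without buying more RU/s, which is why it is the lower-cost option.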

Incorrect: Not A: Increasing request units would also improve throughput, but at a cost.

Reference: https://docs.microsoft.com/en-us/azure/architecture/best-practices/data-partitioning

You have an AI application that uses keys in Azure Key Vault. Recently, a key used by the application was deleted accidentally and was unrecoverable. You need to ensure that if a key is deleted, it is retained in the key vault for 90 days.

Which two features should you configure? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

- A . The expiration date on the keys

- B . Soft delete

- C . Purge protection

- D . Auditors

- E . The activation date on the keys

BC

Explanation:

References: https://docs.microsoft.com/en-us/azure/key-vault/general/soft-delete-overview
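The interaction of the two features can be illustrated with a toy Python model (not the Key Vault SDK): soft delete keeps a deleted key recoverable for a retention period of 7 to 90 days, and purge protection refuses a permanent purge until that period has elapsed, so the key cannot be lost early even by a deliberate purge.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class VaultModel:
    """Toy model of Key Vault deletion behavior; not the Azure SDK."""
    soft_delete: bool
    purge_protection: bool
    retention_days: int = 90
    keys: dict = field(default_factory=dict)     # name -> key material
    deleted: dict = field(default_factory=dict)  # name -> (key material, deletion time)

    def __post_init__(self):
        if not 7 <= self.retention_days <= 90:
            raise ValueError("retention must be between 7 and 90 days")
        if self.purge_protection and not self.soft_delete:
            raise ValueError("purge protection requires soft delete")

    def _within_retention(self, deleted_at: datetime, now: datetime) -> bool:
        return now - deleted_at < timedelta(days=self.retention_days)

    def delete(self, name: str, now: datetime) -> None:
        material = self.keys.pop(name)
        if self.soft_delete:
            self.deleted[name] = (material, now)  # recoverable until retention ends
        # Without soft delete, the key is gone immediately.

    def recover(self, name: str, now: datetime) -> None:
        material, deleted_at = self.deleted[name]
        if not self._within_retention(deleted_at, now):
            raise LookupError(f"{name} was permanently removed after retention")
        del self.deleted[name]
        self.keys[name] = material

    def purge(self, name: str, now: datetime) -> None:
        _, deleted_at = self.deleted[name]
        if self.purge_protection and self._within_retention(deleted_at, now):
            raise PermissionError("purge protection: cannot purge before retention ends")
        del self.deleted[name]
```

With both settings enabled and a 90-day retention, a deleted key stays recoverable for the full 90 days, which is the requirement in the question.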

DRAG DROP

You are designing an AI solution that will analyze media data. The data will be stored in Azure Blob storage.

You need to ensure that the storage account is encrypted by using a key generated by the hardware security module (HSM) of your company.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Explanation:

References:

https://docs.microsoft.com/en-us/azure/storage/common/storage-encryption-keys-portal

https://docs.microsoft.com/en-us/azure/key-vault/key-vault-hsm-protected-keys

You plan to implement a new data warehouse for a planned AI solution.

You have the following information regarding the data warehouse:

– The data files will be available in one week.

– Most queries that will be executed against the data warehouse will be ad-hoc queries.

– The schemas of data files that will be loaded to the data warehouse will change often.

– One month after the planned implementation, the data warehouse will contain 15 TB of data.

You need to recommend a database solution to support the planned implementation.

What two solutions should you include in the recommendation? Each correct answer is a complete solution. NOTE: Each correct selection is worth one point.

- A . Apache Hadoop

- B . Apache Spark

- C . A Microsoft Azure SQL database

- D . An Azure virtual machine that runs Microsoft SQL Server

You need to build a solution to monitor Twitter.

The solution must meet the following requirements:

– Send an email message to the marketing department when negative Twitter messages are detected.

– Run sentiment analysis on Twitter messages that mention specific tags.

– Use the least amount of custom code possible.

Which two services should you include in the solution? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

- A . Azure Databricks

- B . Azure Stream Analytics

- C . Azure Functions

- D . Azure Cognitive Services

- E . Azure Logic Apps

BE

Explanation:

References:

https://docs.microsoft.com/en-us/azure/stream-analytics/streaming-technologies

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-twitter-sentiment-analysis-trends

HOTSPOT

You need to configure security for an Azure Machine Learning service used by groups of data scientists.

The groups must have access to only their own experiments and must be able to grant permissions to the members of their team.

What should you do? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

References:

https://docs.microsoft.com/en-us/machine-learning-server/operationalize/configure-roles#how-are-rolesassigned

https://docs.microsoft.com/en-us/azure/machine-learning/service/how-to-assign-roles

You plan to build an application that will perform predictive analytics. Users will be able to consume the application data by using Microsoft Power BI or a custom website.

You need to ensure that you can audit application usage.

Which auditing solution should you use?

- A . Azure Storage Analytics

- B . Azure Application Insights

- C . Azure diagnostics logs

- D . Azure Active Directory (Azure AD) reporting

HOTSPOT

You need to build a sentiment analysis solution that will use input data from JSON documents and PDF documents. The JSON documents must be processed in batches and aggregated.

Which storage type should you use for each file type? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Azure Blob Storage

The following technologies are recommended choices for batch processing solutions in Azure.

Data storage

– Azure Storage Blob Containers. Many existing Azure business processes already use Azure blob storage, making this a good choice for a big data store.

– Azure Data Lake Store. Azure Data Lake Store offers virtually unlimited storage for any size of file, and extensive security options, making it a good choice for extremely large-scale big data solutions that require a centralized store for data in heterogeneous formats.

Box 2: Azure Blob Storage

References:

https://docs.microsoft.com/en-us/azure/architecture/data-guide/big-data/batch-processing

https://docs.microsoft.com/bs-latn-ba/azure/storage/blobs/storage-blobs-introduction

You are developing a mobile application that will perform optical character recognition (OCR) from photos.

The application will annotate the photos by using metadata, store the photos in Azure Blob storage, and then score the photos by using an Azure Machine Learning model.

What should you use to process the data?

- A . Azure Event Hubs

- B . Azure Functions

- C . Azure Stream Analytics

- D . Azure Logic Apps

- E . Azure Batch AI

B

Explanation:

By using Azure services such as the Computer Vision API and Azure Functions, companies can eliminate the need to manage individual servers, while reducing costs and leveraging the expertise that Microsoft has already developed around processing images with Cognitive Services.

Incorrect:

Not E: The Azure Batch AI service was retired in 2019 and was replaced with Azure Machine Learning Compute.

References: https://docs.microsoft.com/en-us/azure/architecture/example-scenario/ai/intelligent-apps-image-processing

You create an Azure Cognitive Services resource.

A data scientist needs to call the resource from Azure Logic Apps by using the generic HTTP connector.

Which two values should you provide to the data scientist? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

- A . Endpoint URL

- B . Resource name

- C . Access key

- D . Resource group name

- E . Subscription ID

You plan to deploy an AI solution that tracks the behavior of 10 custom mobile apps. Each mobile app has several thousand users.

You need to recommend a solution for real-time data ingestion for the data originating from the mobile app users.

Which Microsoft Azure service should you include in the recommendation?

- A . Azure Event Hubs

- B . Azure Service Bus queues

- C . Azure Service Bus topics and subscriptions

- D . Apache Storm on Azure HDInsight

A

Explanation:

References: https://docs.microsoft.com/en-in/azure/event-hubs/event-hubs-about
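Event Hubs scales ingestion by spreading events over partitions, and events sent with the same partition key land in the same partition in order. The Python sketch below illustrates that routing for 10 apps with many users. Event Hubs uses its own internal hash, so `zlib.crc32` here is only a stand-in, and the partition count of 32 is a hypothetical value.

```python
import zlib
from collections import defaultdict

PARTITION_COUNT = 32  # hypothetical; set when the event hub is created


def partition_for(partition_key: str, partition_count: int = PARTITION_COUNT) -> int:
    # Stand-in hash (not the Event Hubs algorithm): it only shows that the same key
    # always maps to the same partition.
    return zlib.crc32(partition_key.encode("utf-8")) % partition_count


def route(events):
    """Group (partition_key, payload) events by partition, preserving arrival order."""
    partitions = defaultdict(list)
    for key, payload in events:
        partitions[partition_for(key)].append((key, payload))
    return partitions


# Hypothetical events keyed by app and user, so each user's events stay in order.
events = [(f"app{a}:user{u}", f"click-{n}") for n in range(3) for a in range(10) for u in range(100)]
partitions = route(events)
```

Keying by app and user spreads load across partitions while keeping each user's event stream ordered for downstream analytics.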

You plan to deploy Azure IoT Edge devices that will each store more than 10,000 images locally and classify the images by using a Custom Vision Service classifier.

Each image is approximately 5 MB.

You need to ensure that the images persist on the devices for 14 days.

What should you use?

- A . The device cache

- B . Azure Blob storage on the IoT Edge devices

- C . Azure Stream Analytics on the IoT Edge devices

- D . Microsoft SQL Server on the IoT Edge devices

B

Explanation:

References: https://docs.microsoft.com/en-us/azure/iot-edge/how-to-store-data-blob
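Two numbers drive this design: local capacity (more than 10,000 images at about 5 MB each is at least 50 GB per device) and the 14-day retention. The sketch below computes both and builds module-twin desired properties for the Blob Storage on IoT Edge module. The `deviceAutoDeleteProperties` names follow that module's documentation as we understand it; verify them against the module version you deploy.

```python
IMAGES_PER_DEVICE = 10_000   # from the question (a lower bound: "more than 10,000")
IMAGE_SIZE_MB = 5            # from the question ("approximately 5 MB")
RETENTION_DAYS = 14


def min_storage_gb(images: int, image_mb: float) -> float:
    """Minimum local capacity in decimal GB for the images alone."""
    return images * image_mb / 1000


def auto_delete_properties(days: int) -> dict:
    """Module-twin desired properties that delete blobs only after `days` days."""
    return {
        "deviceAutoDeleteProperties": {
            "deleteOn": True,
            "deleteAfterMinutes": days * 24 * 60,
            "retainWhileUploading": True,
        }
    }


capacity_gb = min_storage_gb(IMAGES_PER_DEVICE, IMAGE_SIZE_MB)
desired = auto_delete_properties(RETENTION_DAYS)
```

A device cache has no such retention control, which is why the blob module is the better fit.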

Your company is building custom models that integrate into microservices architecture on Azure Kubernetes Services (AKS).

The model is built by using Python and published to AKS.

You need to update the model and enable Azure Application Insights for the model.

What should you use?

- A . the Azure CLI

- B . MLNET Model Builder

- C . the Azure Machine Learning SDK

- D . the Azure portal

C

Explanation:

You can set up Azure Application Insights for Azure Machine Learning.

Application Insights gives you the opportunity to monitor:

– Request rates, response times, and failure rates.

– Dependency rates, response times, and failure rates.

– Exceptions.

Requirements include an Azure Machine Learning workspace, a local directory that contains your scripts, and the Azure Machine Learning SDK for Python installed.

References: https://docs.microsoft.com/bs-latn-ba/azure/machine-learning/service/how-to-enable-app-insights

You are designing an AI solution that will analyze millions of pictures by using Azure HDInsight Hadoop cluster. You need to recommend a solution for storing the pictures. The solution must minimize costs.

Which storage solution should you recommend?

- A . Azure Table storage

- B . Azure File Storage

- C . Azure Data Lake Storage Gen2

- D . Azure Data Lake Storage Gen1

D

Explanation:

Azure Data Lake Storage Gen1 is adequate and less expensive compared to Gen2.

References: https://visualbi.com/blogs/microsoft/introduction-azure-data-lake-gen2/

You deploy an application that performs sentiment analysis on the data stored in Azure Cosmos DB.

Recently, you loaded a large amount of data to the database. The data was for a customer named Contoso, Ltd. You discover that queries for the Contoso data are slow to complete, and the queries slow the entire application.

You need to reduce the amount of time it takes for the queries to complete. The solution must minimize costs.

What should you do? More than one answer choice may achieve the goal. (Choose two.)

- A . Change the request units.

- B . Change the partitioning strategy.

- C . Change the transaction isolation level.

- D . Migrate the data to the Cosmos DB database.

AB

Explanation:

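Increasing the request units would improve throughput, but at a cost. Throughput provisioned for a container is divided evenly among physical partitions, so a partitioning strategy that spreads the Contoso data across more partitions lets its queries use more of the provisioned throughput.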

References: https://docs.microsoft.com/en-us/azure/architecture/best-practices/data-partitioning

Your company has several AI solutions and bots. You need to implement a solution to monitor the utilization of the bots. The solution must ensure that analysts at the company can generate dashboards to review the utilization.

What should you include in the solution?

- A . Azure Application Insights

- B . Azure Data Explorer

- C . Azure Logic Apps

- D . Azure Monitor

A

Explanation:

Bot Analytics. Analytics is an extension of Application Insights. Application Insights provides service-level and instrumentation data like traffic, latency, and integrations. Analytics provides conversation-level reporting on user, message, and channel data.

References: https://docs.microsoft.com/en-us/azure/bot-service/bot-service-manage-analytics

You plan to design a bot that will be hosted by using Azure Bot Service.

Your company identifies the following compliance requirements for the bot:

– Payment Card Industry Data Security Standards (PCI DSS)

– General Data Protection Regulation (GDPR)

– ISO 27001

You need to identify which compliance requirements are met by hosting the bot in the bot service.

What should you identify?

- A . PCI DSS only

- B . PCI DSS, ISO 27001, and GDPR

- C . ISO 27001 only

- D . GDPR only

B

Explanation:

Azure Bot Service is compliant with ISO 27001:2013, ISO 27019:2014, SOC 1 and 2, Payment Card Industry Data Security Standard (PCI DSS), and Health Insurance Portability and Accountability Act Business Associate Agreement (HIPAA BAA).

Microsoft products and services, including Azure Bot Service, are available today to help you meet the GDPR requirements.

References:

https://docs.microsoft.com/en-us/azure/bot-service/bot-service-compliance

https://blog.botframework.com/2018/04/23/general-data-protection-regulation-gdpr/

HOTSPOT

You plan to use Azure Cognitive Services to provide the development team at your company with the ability to create intelligent apps without having direct AI or data science skills.

The company identifies the following requirements for the planned Cognitive Services deployment:

– Provide support for the following languages: English, Portuguese, and German.

– Perform text analytics to derive a sentiment score.

Which Cognitive Service service should you deploy for each requirement? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Text Analytics

The Language Detection feature of the Azure Text Analytics REST API evaluates text input for each document and returns language identifiers with a score that indicates the strength of the analysis.
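A minimal sketch of the two calls involved, language detection and sentiment, built (but not sent) with the Python standard library. It assumes the Text Analytics v3.0 paths `/text/analytics/v3.0/languages` and `/text/analytics/v3.0/sentiment` and the `Ocp-Apim-Subscription-Key` header; the endpoint and key are placeholders, and the sample sentences cover English, Portuguese, and German.

```python
import json
from urllib.request import Request


def text_analytics_request(endpoint: str, key: str, operation: str, documents: list) -> Request:
    """Build (but do not send) a Text Analytics v3.0 request.

    operation: "languages" (language detection) or "sentiment".
    documents: dicts with a "text" field and, optionally, a "language" field.
    """
    if operation not in ("languages", "sentiment"):
        raise ValueError(f"unsupported operation: {operation}")
    # Each document needs a unique id so results can be matched back to inputs.
    body = {"documents": [{"id": str(i), **doc} for i, doc in enumerate(documents, start=1)]}
    return Request(
        f"{endpoint.rstrip('/')}/text/analytics/v3.0/{operation}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Ocp-Apim-Subscription-Key": key, "Content-Type": "application/json"},
        method="POST",
    )


samples = [
    {"text": "The new handbook is very helpful."},
    {"text": "O novo manual é muito útil."},
    {"text": "Das neue Handbuch ist sehr hilfreich."},
]
detect = text_analytics_request(
    "https://my-language-resource.cognitiveservices.azure.com/", "<access-key>", "languages", samples
)
```

For the sentiment call, pass the same documents with each one's detected language code added as `language`; otherwise the service falls back to a default language.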

Box 2: Language API

References: https://docs.microsoft.com/en-us/azure/cognitive-services/text-analytics/how-tos/text-analytics-how-to-language-detection

https://docs.microsoft.com/en-us/azure/azure-databricks/databricks-sentiment-analysis-cognitive-services

HOTSPOT

You plan to deploy the Text Analytics and Computer Vision services. The Azure Cognitive Services will be deployed to the West US and East Europe Azure regions. You need to identify the minimum number of service endpoints and API keys required for the planned deployment.

What should you identify? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: 2

After you create a Cognitive Services resource in the Azure portal, you get an endpoint and a key for authenticating your applications. You can access Azure Cognitive Services through two kinds of resources: a multi-service resource or a single-service resource. A multi-service resource lets you access multiple Azure Cognitive Services with a single key and endpoint.

Note: You need a key and an endpoint for a Text Analytics resource. Azure Cognitive Services are represented by Azure resources that you subscribe to. Each request must include your access key and an HTTP endpoint. The endpoint specifies the region you chose during sign-up, the service URL, and the resource used on the request.

Box 2: 2

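You need at least one key per region, because each Cognitive Services resource, together with its endpoint and keys, is created in a single region.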
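The counting behind both answers can be written out as a small function. This is a sketch under stated assumptions: each resource lives in exactly one region and exposes one endpoint, and its two interchangeable keys (provided for rotation) are counted as one key, as the answer does. The `minimum_endpoints` helper is hypothetical.

```python
def minimum_endpoints(services: list, regions: list, multi_service: bool = True) -> int:
    """Estimate the minimum number of endpoint/key pairs for a deployment.

    Assumes one endpoint per resource and one resource per region; the two keys
    that every resource provides are counted as a single key here.
    """
    if not services or not regions:
        return 0
    if multi_service:
        # One multi-service resource per region covers every service in that region.
        return len(regions)
    # Otherwise, one single-service resource per service per region.
    return len(services) * len(regions)

services = ["Text Analytics", "Computer Vision"]
regions = ["West US", "East Europe"]
print(minimum_endpoints(services, regions))                       # 2
print(minimum_endpoints(services, regions, multi_service=False))  # 4
```

The multi-service resource is what brings the minimum down from four to two.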

References: https://docs.microsoft.com/en-us/azure/cognitive-services/cognitive-services-apis-create-account

Your company plans to create a mobile app that will be used by employees to query the employee handbook.

You need to ensure that the employees can query the handbook by typing or by using speech.

Which core component should you use for the app?

- A . Language Understanding (LUIS)

- B . QnA Maker

- C . Text Analytics

- D . Azure Search

You have an existing Language Understanding (LUIS) model for an internal bot.

You need to recommend a solution to add a meeting reminder functionality to the bot by using a prebuilt model. The solution must minimize the size of the model.

Which component of LUIS should you recommend?

- A . domain

- B . intents

- C . entities

You have an on-premises repository that contains 5,000 videos. The videos feature demonstrations of the products sold by your company.

The company’s customers plan to search the videos by using the name of the product demonstrated in each video.

You need to build a custom search tool for the customers.

What should you do first?

- A . Deploy an Azure Media Services resource.

- B . Create an Azure Storage account and a blob container.

- C . Create an Azure Search resource.

- D . Deploy a Custom Vision API service.

Your company manages a sports team.

The company sets up a video booth to record messages for the team.

Before replaying the messages on a video screen, you need to generate captions for the messages and check the sentiment of the video to ensure that only positive messages are played.

Which Azure Cognitive Services service should you use?

- A . Language Understanding (LUIS)

- B . Speaker Recognition

- C . Custom Vision

- D . Video Indexer

D

Explanation:

Video Indexer includes audio transcription, which converts speech to text in 12 languages and allows extensions. Supported languages include English, Spanish, French, German, Italian, Mandarin Chinese, Japanese, Arabic, Russian, Portuguese, Hindi, and Korean.

When indexing by one channel, partial results are available for those models, for example sentiment analysis, which identifies positive, negative, and neutral sentiments from speech and visual text.
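Once Video Indexer has produced sentiment insights, deciding which messages to replay is a simple filter. The sketch below assumes a simplified, hypothetical insights shape with a `sentiments` list whose items carry a `sentimentType` of `Positive`, `Negative`, or `Neutral`. The replay policy (at least one positive segment and no negative ones) is also an assumption, not something the service prescribes.

```python
def is_positive_message(insights: dict) -> bool:
    """Decide whether a recorded message may be replayed.

    Assumes a simplified, hypothetical insights shape such as
    {"sentiments": [{"sentimentType": "Positive"}, {"sentimentType": "Neutral"}]}.
    Policy (hypothetical): at least one positive segment and no negative segments.
    """
    kinds = {s.get("sentimentType") for s in insights.get("sentiments", [])}
    return "Positive" in kinds and "Negative" not in kinds

messages = {
    "msg-001": {"sentiments": [{"sentimentType": "Positive"}, {"sentimentType": "Neutral"}]},
    "msg-002": {"sentiments": [{"sentimentType": "Positive"}, {"sentimentType": "Negative"}]},
    "msg-003": {"sentiments": []},
}
playlist = [name for name, ins in messages.items() if is_positive_message(ins)]
print(playlist)  # ['msg-001']
```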

Reference: https://docs.microsoft.com/en-us/azure/media-services/video-indexer/video-indexer-overview

HOTSPOT

You plan to build an app that will provide users with the ability to dictate messages and convert the messages into text.

You need to recommend a solution to meet the following requirements for the app:

– Must be able to transcribe streaming dictated messages that are longer than 15 seconds.

– Must be able to upload existing recordings to Azure Blob storage to be transcribed later.

Which solution should you recommend for each requirement? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: The Speech SDK

The Speech SDK supports continuous recognition of streamed audio, so it is not limited to 15 seconds per message.

Box 2: Batch Transcription API

Batch transcription is a set of REST API operations that enables you to transcribe a large amount of audio in storage. You can point to audio files with a shared access signature (SAS) URI and asynchronously receive transcription results. With the new v3.0 API, you have the choice of transcribing one or more audio files or processing a whole storage container.

Asynchronous speech-to-text transcription is just one of the features.
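The two input choices described above map to two alternative fields in the request body. The sketch below assembles, without sending, a v3.0 batch transcription request. It assumes the regional endpoint form and the `contentUrls` / `contentContainerUrl` fields; the region, key, storage URL, and the `build_batch_transcription` helper are hypothetical placeholders.

```python
import json
from typing import Optional

def build_batch_transcription(region: str, key: str, locale: str,
                              content_urls: Optional[list] = None,
                              container_url: Optional[str] = None,
                              display_name: str = "dictated-messages") -> dict:
    """Assemble (but do not send) a batch transcription request for the v3.0 REST API.

    Exactly one of content_urls (SAS URIs of individual files) or container_url
    (a SAS URI of a whole container) must be supplied.
    """
    if (content_urls is None) == (container_url is None):
        raise ValueError("supply exactly one of content_urls or container_url")
    body = {"displayName": display_name, "locale": locale}
    if content_urls is not None:
        body["contentUrls"] = list(content_urls)     # one or more individual files
    else:
        body["contentContainerUrl"] = container_url  # every file in the container
    return {
        "method": "POST",
        "url": f"https://{region}.api.cognitive.microsoft.com/speechtotext/v3.0/transcriptions",
        "headers": {"Ocp-Apim-Subscription-Key": key, "Content-Type": "application/json"},
        "body": json.dumps(body),
    }

req = build_batch_transcription(
    region="westus",
    key="<your-speech-key>",
    locale="en-US",
    container_url="https://<account>.blob.core.windows.net/recordings?<sas-token>",
)
print(req["url"])
```

Results arrive asynchronously, so a caller would poll the created transcription rather than wait on this request.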

Reference:

https://github.com/Azure-Samples/cognitive-services-speech-sdk/issues/13

https://docs.microsoft.com/en-us/azure/cognitive-services/speech-service/batch-transcription

Your company plans to monitor Twitter hashtags, and then to build a graph of connected people and places that contains the associated sentiment.

The monitored hashtags use several languages, but the graph will be displayed in English.

You need to recommend the required Azure Cognitive Services endpoints for the planned graph.

Which Cognitive Services endpoints should you recommend?

- A . Language Detection, Content Moderator, and Key Phrase Extraction

- B . Translator Text, Content Moderator, and Key Phrase Extraction

- C . Language Detection, Sentiment Analysis, and Key Phrase Extraction

- D . Translator Text, Sentiment Analysis, and Named Entity Recognition

C

Explanation:

Sentiment analysis, which is also called opinion mining, uses social media analytics tools to determine attitudes toward a product or idea.

Translator Text: Translate text in real time across more than 60 languages, powered by the latest innovations in machine translation.

The Key Phrase Extraction skill evaluates unstructured text, and for each record, returns a list of key phrases. This skill uses the machine learning models provided by Text Analytics in Cognitive Services. This capability is useful if you need to quickly identify the main talking points in the record. For example, given input text "The food was delicious and there were wonderful staff", the service returns "food" and "wonderful staff".
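In Azure Cognitive Search, the Key Phrase Extraction skill is declared as one entry in a skillset. The sketch below builds that definition as a Python dictionary. It assumes the source text sits at `/document/content` and that English is an acceptable default language; both values, and the `key_phrase_skill` helper, are illustrative choices rather than requirements.

```python
import json

def key_phrase_skill(text_source: str = "/document/content",
                     default_language: str = "en") -> dict:
    """Return a KeyPhraseExtractionSkill definition for a Cognitive Search skillset.

    text_source and default_language are assumed values for illustration.
    """
    return {
        "@odata.type": "#Microsoft.Skills.Text.KeyPhraseExtractionSkill",
        "context": "/document",                 # run once per document
        "defaultLanguageCode": default_language,
        "inputs": [{"name": "text", "source": text_source}],
        "outputs": [{"name": "keyPhrases", "targetName": "keyPhrases"}],
    }

print(json.dumps(key_phrase_skill(), indent=2))
```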

Reference:

https://docs.microsoft.com/en-us/azure/cognitive-services/text-analytics/how-tos/text-analytics-how-to-entity-linking

https://docs.microsoft.com/en-us/azure/search/cognitive-search-skill-keyphrases

HOTSPOT

You plan to create an intelligent bot to handle internal user chats to the help desk of your company.

The bot has the following requirements:

– Must be able to interpret what a user means.

– Must be able to perform multiple tasks for a user.

– Must be able to answer questions from an existing knowledge base.

You need to recommend which solutions meet the requirements.

Which solution should you recommend for each requirement? To answer, drag the appropriate solutions to the correct requirements. Each solution may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: The Language Understanding (LUIS) service

Language Understanding (LUIS) is a cloud-based API service that applies custom machine-learning intelligence to a user’s conversational, natural language text to predict overall meaning, and pull out relevant, detailed information.

Box 2: Text Analytics API

The Text Analytics API is a cloud-based service that provides advanced natural language processing over raw text, and includes four main functions: sentiment analysis, key phrase extraction, named entity recognition, and language detection.

Box 3: The QnA Maker service

QnA Maker is a cloud-based Natural Language Processing (NLP) service that easily creates a natural conversational layer over your data. It can be used to find the most appropriate answer for any given natural language input, from your custom knowledge base (KB) of information.

Incorrect Answers:

Dispatch tool library:

If a bot uses multiple LUIS models and QnA Maker knowledge bases (KBs), you can use the Dispatch tool to determine which LUIS model or QnA Maker knowledge base best matches the user input. The Dispatch tool does this by creating a single LUIS app that routes user input to the correct model.
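The routing step that follows a Dispatch prediction can be sketched as picking the top-scoring intent and mapping it to its target. This assumes the Dispatch naming convention of an `l_` prefix for LUIS apps and a `q_` prefix for QnA Maker knowledge bases. The intent names, the 0.5 threshold, and the `route` helper are hypothetical.

```python
def route(intent_scores: dict, threshold: float = 0.5) -> tuple:
    """Pick the downstream model for an utterance from Dispatch-style intents.

    Assumes "l_<LuisApp>" intents for LUIS apps, "q_<KnowledgeBase>" intents for
    QnA Maker knowledge bases, and "None" when nothing matches.
    """
    if not intent_scores:
        return ("none", "")
    top, score = max(intent_scores.items(), key=lambda kv: kv[1])
    if score < threshold or top == "None":
        return ("none", "")
    if top.startswith("l_"):
        return ("luis", top[2:])  # forward to the named LUIS app
    if top.startswith("q_"):
        return ("qna", top[2:])   # forward to the named knowledge base
    return ("none", "")

print(route({"l_HelpDeskTasks": 0.91, "q_HelpDeskFAQ": 0.07, "None": 0.02}))
# ('luis', 'HelpDeskTasks')
```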

Reference:

https://docs.microsoft.com/en-us/azure/bot-service/bot-builder-tutorial-dispatch

https://docs.microsoft.com/en-us/azure/cognitive-services/qnamaker/overview/overview

You are developing a mobile application that will perform optical character recognition (OCR) from photos.

The application will annotate the photos by using metadata, store the photos in Azure Blob storage, and then score the photos by using an Azure Machine Learning model.

What should you use to process the data?

- A . Azure Event Hubs

- B . Azure Functions

- C . Azure Stream Analytics

- D . Azure Logic Apps

You are designing an AI solution that will analyze millions of pictures by using an Azure HDInsight Hadoop cluster.

You need to recommend a solution for storing the pictures. The solution must minimize costs.

Which storage solution should you recommend?

- A . Azure Table storage

- B . Azure File Storage

- C . Azure Data Lake Storage Gen2

- D . Azure Databricks File System

C

Explanation:

Azure Data Lake Store is optimized for storing large amounts of data for reporting and analytics, and it is geared toward storing data in its native format, which makes it a good store for non-relational data.
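An HDInsight cluster typically addresses files in Data Lake Storage Gen2 through the ABFS driver, using URIs of the form `abfss://<container>@<account>.dfs.core.windows.net/<path>`. The sketch below builds such a URI; the account, container, and folder names are hypothetical.

```python
def abfss_uri(account: str, container: str, path: str = "") -> str:
    """Build a secure ABFS URI for a file or folder in Data Lake Storage Gen2."""
    path = path.lstrip("/")  # the container root already ends with "/"
    return f"abfss://{container}@{account}.dfs.core.windows.net/{path}"

print(abfss_uri("contosopictures", "images", "/2020/batch-01/"))
# abfss://images@contosopictures.dfs.core.windows.net/2020/batch-01/
```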

Reference: https://stackify.com/store-data-azure-understand-azure-data-storage-options/

You are designing a real-time speech-to-text AI feature for an Android mobile app. The feature will stream data to the Speech service.

You need to recommend which audio format to use to serialize the audio. The solution must minimize the amount of data transferred to the cloud.

What should you recommend?

- A . MP3

- B . WAV/PCM

- C . MP4a

B

Explanation:

Currently, only the following configuration is supported: audio samples in PCM format, one channel, 16 bits per sample, 8,000 or 16,000 samples per second (16,000 or 32,000 bytes per second), and a block align of two (16 bits, including padding, per sample).
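The byte rates quoted above follow from multiplying the sample rate by the block align (bytes per sample frame). The sketch below reproduces that arithmetic. The 30-second message length is a hypothetical example, and the helper names are illustrative.

```python
def block_align(bits_per_sample: int = 16, channels: int = 1) -> int:
    """Bytes per sample frame across all channels, rounding bits up to whole bytes."""
    return channels * ((bits_per_sample + 7) // 8)

def bytes_per_second(sample_rate: int, bits_per_sample: int = 16, channels: int = 1) -> int:
    """Raw PCM data rate in bytes per second."""
    return sample_rate * block_align(bits_per_sample, channels)

for rate in (8000, 16000):
    print(rate, "Hz ->", bytes_per_second(rate), "bytes/s")
# 8000 Hz -> 16000 bytes/s
# 16000 Hz -> 32000 bytes/s

# A hypothetical 30-second dictated message at 16 kHz:
print(30 * bytes_per_second(16000))  # 960000
```

Choosing 8,000 samples per second halves the data sent compared with 16,000, at the cost of audio fidelity.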

Reference: https://docs.microsoft.com/en-us/azure/cognitive-services/speech-service/how-to-use-audio-input-streams

Testlet 2

Overview

Contoso, Ltd. has an office in New York to serve its North American customers and an office in Paris to serve its European customers.

Existing Environment

Infrastructure

Each office has a small data center that hosts Active Directory services and a few off-the-shelf software solutions used by internal users.

The network contains a single Active Directory forest that contains a single domain named contoso.com. Azure Active Directory (Azure AD) Connect is used to extend identity management to Azure.

The company has an Azure subscription. Each office has an Azure ExpressRoute connection to the subscription. The New York office connects to a virtual network hosted in the East US 2 Azure region. The Paris office connects to a virtual network hosted in the West Europe Azure region.

The New York office has an Azure Stack Development Kit (ASDK) deployment that is used for development and testing.

Current Business Model

Contoso has a web app named Bookings hosted in an App Service Environment (ASE). The ASE is in the virtual network in the East US 2 region. Contoso employees and customers use Bookings to reserve hotel rooms.

Data Environment

Bookings connects to a Microsoft SQL Server database named hotelDB in the New York office.

The database has a view named vwAvailability that consolidates columns from the tables named Hotels, Rooms, and RoomAvailability. The database contains data that was collected during the last 20 years.

Problem Statements

Contoso identifies the following issues with its current business model:

– European users report that access to Bookings is slow, and they lose customers who must wait on the phone while they search for available rooms.

– Users report that Bookings was unavailable during an outage in the New York data center for more than 24 hours.

Requirements

Business Goals

Contoso wants to provide a new version of the Bookings app that will provide a highly available, reliable service for booking travel packages by interacting with a chatbot named Butler.

Contoso plans to move all production workloads to the cloud.

Technical requirements

Contoso identifies the following technical requirements:

– Data scientists must test Butler by using ASDK.

– Whenever possible, solutions must minimize costs.

– Butler must greet users by name when they first connect.

– Butler must be able to handle up to 10,000 messages a day.

– Butler must recognize the users’ intent based on basic utterances.

– All configurations to the Azure Bot Service must be logged centrally.

– Whenever possible, solutions must use the principle of least privilege.

– Internal users must be able to access Butler by using Microsoft Skype for Business.

– The new Bookings app must provide a user interface where users can interact with Butler.

– Users in an Azure AD group named KeyManagers must be able to manage keys for all Azure Cognitive Services.

– Butler must provide users with the ability to reserve a room, cancel a reservation, and view existing reservations.

– The new Bookings app must be available to users in North America and Europe if a single data center or Azure region fails.

– For continuous improvement, you must be able to test Butler by sending sample utterances and comparing the chatbot’s responses to the actual intent.

– You must maintain relationships between data after migration.
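The continuous-improvement requirement amounts to comparing expected intents with the intents Butler predicts. LUIS offers batch testing for this in its portal; the sketch below only illustrates the comparison. The intent names are hypothetical, based on the reservation tasks listed above.

```python
from collections import Counter

def intent_accuracy(results):
    """results: iterable of (expected_intent, predicted_intent) pairs.

    Returns the fraction of correct predictions and a Counter of misclassifications.
    """
    results = list(results)
    if not results:
        return 0.0, Counter()
    confusions = Counter((exp, pred) for exp, pred in results if exp != pred)
    correct = len(results) - sum(confusions.values())
    return correct / len(results), confusions

batch = [
    ("ReserveRoom", "ReserveRoom"),
    ("CancelReservation", "CancelReservation"),
    ("ViewReservations", "ReserveRoom"),
    ("ReserveRoom", "ReserveRoom"),
]
accuracy, confusions = intent_accuracy(batch)
print(accuracy)                   # 0.75
print(confusions.most_common(1))  # [(('ViewReservations', 'ReserveRoom'), 1)]
```

Tracking the confusion pairs, not just the accuracy, shows which intents need more example utterances.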

You need to recommend a data storage solution that meets the technical requirements.

What is the best data storage solution to recommend? More than one answer choice may achieve the goal. Select the BEST answer.

- A . Azure Databricks

- B . Azure SQL Database

- C . Azure Table storage

- D . Azure Cosmos DB

D

Explanation:

Reference:

https://docs.microsoft.com/en-us/azure/architecture/example-scenario/ai/commerce-chatbot

https://docs.microsoft.com/en-us/azure/cosmos-db/introduction

Question Set 1

Your company has a data team of Scala and R experts.

You plan to ingest data from multiple Apache Kafka streams.

You need to recommend a processing technology to broker messages at scale from Kafka streams to Azure Storage.

What should you recommend?

- A . Azure Databricks

- B . Azure Functions

- C . Azure HDInsight with Apache Storm

- D . Azure HDInsight with Microsoft Machine Learning Server

You are designing an AI application that will use an Azure Machine Learning Studio experiment.

The source data contains more than 200 TB of relational tables. The experiment will run once a month.

You need to identify a data storage solution for the application. The solution must minimize compute costs.

Which data storage solution should you identify?

- A . Azure Database for MySQL

- B . Azure SQL Database

- C . Azure SQL Data Warehouse

HOTSPOT

You are designing a solution that will analyze bank transactions in real time. The transactions will be evaluated by using an algorithm and classified into one of five groups. The transaction data will be enriched with information taken from Azure SQL Database before the transactions are sent to the classification process. The enrichment process will require custom code. Data from different banks will require different stored procedures.

You need to develop a pipeline for the solution.

Which components should you use for data ingestion and data preparation? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

References: https://docs.microsoft.com/bs-latn-ba/azure/architecture/example-scenario/data/fraud-detection

DRAG DROP

You are designing an Azure Batch AI solution that will be used to train many different Azure Machine Learning models.

The solution will perform the following:

– Image recognition

– Deep learning that uses convolutional neural networks.

You need to select a compute infrastructure for each model. The solution must minimize the processing time.

What should you use for each model? To answer, drag the appropriate compute infrastructures to the correct models. Each compute infrastructure may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point.

Explanation:

References: https://docs.microsoft.com/en-us/azure/virtual-machines/windows/sizes-gpu