Given the data limitations and the complexity of the problem, which of the following approaches is the MOST LIKELY to determine the feasibility of an ML solution and guide your next steps?

You are a data scientist at a healthcare startup tasked with developing a machine learning model to predict the likelihood of patients developing a specific chronic disease within the next five years. The dataset available includes patient demographics, medical history, lab results, and lifestyle factors, but it is relatively small, with only 1,000 records. Additionally, the dataset has missing values in some critical features, and the class distribution is highly imbalanced, with only 5% of patients labeled as having developed the disease.

Given the data limitations and the complexity of the problem, which of the following approaches is the MOST LIKELY to determine the feasibility of an ML solution and guide your next steps?

A. Proceed with training a deep neural network (DNN) model using the available data, as DNNs can handle small datasets by learning complex patterns

B. Increase the dataset size by generating synthetic data and then train a simple logistic regression model to avoid overfitting

C. Conduct exploratory data analysis (EDA) to understand the data distribution, address missing values, and assess the class imbalance before determining if an ML solution is feasible

D. Immediately apply an oversampling technique to balance the dataset, then train an XGBoost model to maximize performance on the minority class

Answer: C

Explanation:

Correct option:

Conduct exploratory data analysis (EDA) to understand the data distribution, address missing values, and assess the class imbalance before determining if an ML solution is feasible

Conducting exploratory data analysis (EDA) is the most appropriate first step. EDA allows you to understand the data distribution, identify and address missing values, and assess the extent of the class imbalance. This process helps determine whether the available data is sufficient to build a reliable model and what preprocessing steps might be necessary.

Exploratory data analysis, feature engineering, and operationatizing your data ftow into your ML pipeline with Amazon

SageMaker Data Wrangter

by Phi Nguyen and Roberto Bruno Martins | on 11 DEC 2020 | in Amazon 5agef•1aker, Amazon SageMaker Data Wrangter, Artificial Intelligence | Permalink | Comments | r’+ Share

According to The State of Data Science 2020 survey, data management, exploratory data analysis (EDA), feature selection, and feature engineering accounts for more than 66% of a data scientist’s time (see the following diagram).

Data

loading 19o/

eta

cleansing

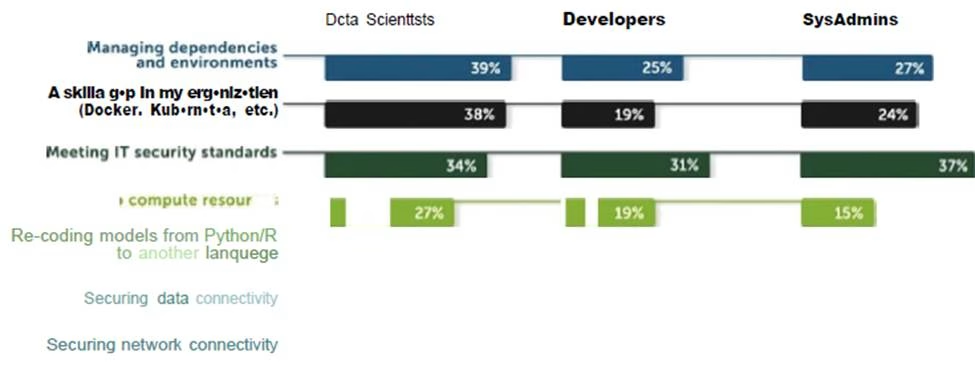

The same survey highlights that the top three biggest roadblocks to deploying a model in production are managing dependencies and environments, security, and skill gaps (see the following diagram).

via –

https://aws.amazon.com/blogs/machine-learning/exploratory-data-analysis-feature-engineering-and-operationalizing-your-data-flow-into-your-ml-pipeline-with-amazon-sagemaker-data-wrangler/

Incorrect options:

Proceed with training a deep neural network (DNN) model using the available data, as DNNs can handle small datasets by learning complex patterns – Training a deep neural network on a small dataset is not advisable, as DNNs typically require large amounts of data to perform well and avoid overfitting. Additionally, jumping directly to model training without assessing the data first may lead to poor results.

Increase the dataset size by generating synthetic data and then train a simple logistic regression model to avoid overfitting – While generating synthetic data can help increase the dataset size, it may introduce biases if not done carefully. Additionally, without first understanding the data through EDA, you risk applying the wrong strategy or misinterpreting the results.

Immediately apply an oversampling technique to balance the dataset, then train an XGBoost model to maximize performance on the minority class – Although oversampling can address class imbalance, it’s important to first understand the underlying data issues through EDA. Oversampling should not be the immediate next step without understanding the data quality, feature importance, and potential need for feature engineering.

References:

https://aws.amazon.com/blogs/machine-learning/exploratory-data-analysis-feature-engineering-and-oper

ationalizing-your-data-flow-into-your-ml-pipeline-with-amazon-sagemaker-data-wrangler/

https://aws.amazon.com/blogs/machine-learning/use-amazon-sagemaker-canvas-for-exploratory-data-a

nalysis/

Latest MLA-C01 Dumps Valid Version with 125 Q&As

Latest And Valid Q&A | Instant Download | Once Fail, Full Refund