Databricks Certified Data Engineer Professional Exam Online Training

The questions for Databricks Certified Professional Data Engineer were last updated on Mar 04, 2026.

- Exam Code: Databricks Certified Professional Data Engineer

- Exam Name: Databricks Certified Data Engineer Professional Exam

- Certification Provider: Databricks

- Latest update: Mar 04, 2026

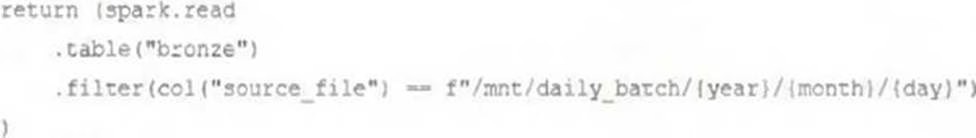

A nightly job ingests data into a Delta Lake table using the following code:

The next step in the pipeline requires a function that returns an object that can be used to manipulate new records that have not yet been processed to the next table in the pipeline.

Which code snippet completes this function definition?

A) def new_records():

B) return spark.readStream.table("bronze")

C) return spark.readStream.load("bronze")

D) return spark.read.option("readChangeFeed", "true").table("bronze")

E)

- A . Option A

- B . Option B

- C . Option C

- D . Option D

- E . Option E
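The idea of "new records that have not yet been processed" can be illustrated with a toy model. The sketch below is plain Python, not Spark or Delta Lake code: the class `ToyIncrementalReader` and the list `bronze_commits` are hypothetical names, and the stored index only stands in for the checkpoint that an incremental reader would maintain.

```python
class ToyIncrementalReader:
    """Hands out each committed batch once, remembering its position (a stand-in for a checkpoint)."""

    def __init__(self, commits):
        self._commits = commits  # shared, append-only list of batches
        self._next_index = 0     # position of the first batch not yet handed out

    def new_records(self):
        # Return only records from batches committed since the previous call.
        pending = self._commits[self._next_index:]
        self._next_index = len(self._commits)
        return [record for batch in pending for record in batch]


bronze_commits = [["r1", "r2"]]          # hypothetical first nightly load
reader = ToyIncrementalReader(bronze_commits)

first = reader.new_records()             # everything committed so far
bronze_commits.append(["r3"])            # hypothetical second nightly load
second = reader.new_records()            # only the newly committed batch
third = reader.new_records()             # nothing new since the last call
print(first, second, third)
```

A plain batch read of the same list would return every record on every call, which is the distinction the question is probing.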

A junior data engineer is working to implement logic for a Lakehouse table named silver_device_recordings. The source data contains 100 unique fields in a highly nested JSON structure.

The silver_device_recordings table will be used downstream to power several production monitoring dashboards and a production model. At present, 45 of the 100 fields are being used in at least one of these applications.

The data engineer is trying to determine the best approach for dealing with schema declaration given the highly-nested structure of the data and the numerous fields.

Which of the following accurately presents information about Delta Lake and Databricks that may impact their decision-making process?

- A . The Tungsten encoding used by Databricks is optimized for storing string data; newly-added native support for querying JSON strings means that string types are always most efficient.

- B . Because Delta Lake uses Parquet for data storage, data types can be easily evolved by just modifying file footer information in place.

- C . Human labor in writing code is the largest cost associated with data engineering workloads; as such, automating table declaration logic should be a priority in all migration workloads.

- D . Because Databricks will infer schema using types that allow all observed data to be processed, setting types manually provides greater assurance of data quality enforcement.

- E . Schema inference and evolution on Databricks ensure that inferred types will always accurately match the data types used by downstream systems.

The data engineering team maintains the following code:

Assuming that this code produces logically correct results and the data in the source tables has been de-duplicated and validated, which statement describes what will occur when this code is executed?

- A . A batch job will update the enriched_itemized_orders_by_account table, replacing only those rows that have different values than the current version of the table, using accountID as the primary key.

- B . The enriched_itemized_orders_by_account table will be overwritten using the current valid version of data in each of the three tables referenced in the join logic.

- C . An incremental job will leverage information in the state store to identify unjoined rows in the source tables and write these rows to the enriched_itemized_orders_by_account table.

- D . An incremental job will detect if new rows have been written to any of the source tables; if new rows are detected, all results will be recalculated and used to overwrite the enriched_itemized_orders_by_account table.

- E . No computation will occur until enriched_itemized_orders_by_account is queried; upon query materialization, results will be calculated using the current valid version of data in each of the three tables referenced in the join logic.

The data engineering team is migrating an enterprise system with thousands of tables and views into the Lakehouse. They plan to implement the target architecture using a series of bronze, silver, and gold tables. Bronze tables will almost exclusively be used by production data engineering workloads, while silver tables will be used to support both data engineering and machine learning workloads. Gold tables will largely serve business intelligence and reporting purposes. While personal identifying information (PII) exists in all tiers of data, pseudonymization and anonymization rules are in place for all data at the silver and gold levels.

The organization is interested in reducing security concerns while maximizing the ability to collaborate across diverse teams.

Which statement exemplifies best practices for implementing this system?

- A . Isolating tables in separate databases based on data quality tiers allows for easy permissions management through database ACLs and allows physical separation of default storage locations for managed tables.

- B . Because databases on Databricks are merely a logical construct, choices around database organization do not impact security or discoverability in the Lakehouse.

- C . Storing all production tables in a single database provides a unified view of all data assets available throughout the Lakehouse, simplifying discoverability by granting all users view privileges on this database.

- D . Working in the default Databricks database provides the greatest security when working with managed tables, as these will be created in the DBFS root.

- E . Because all tables must live in the same storage containers used for the database they’re created in, organizations should be prepared to create between dozens and thousands of databases depending on their data isolation requirements.

The data architect has mandated that all tables in the Lakehouse should be configured as external Delta Lake tables.

Which approach will ensure that this requirement is met?

- A . Whenever a database is being created, make sure that the location keyword is used.

- B . When configuring an external data warehouse for all table storage, leverage Databricks for all ELT.

- C . Whenever a table is being created, make sure that the location keyword is used.

- D . When tables are created, make sure that the external keyword is used in the create table statement.

- E . When the workspace is being configured, make sure that external cloud object storage has been mounted.

To reduce storage and compute costs, the data engineering team has been tasked with curating a series of aggregate tables leveraged by business intelligence dashboards, customer-facing applications, production machine learning models, and ad hoc analytical queries.

The data engineering team has been made aware of new requirements from a customer-facing application, which is the only downstream workload they manage entirely. As a result, an aggregate table used by numerous teams across the organization will need to have a number of fields renamed, and additional fields will also be added.

Which of the solutions addresses the situation while minimally interrupting other teams in the organization without increasing the number of tables that need to be managed?

- A . Send all users notice that the schema for the table will be changing; include in the communication the logic necessary to revert the new table schema to match historic queries.

- B . Configure a new table with all the requisite fields and new names and use this as the source for the customer-facing application; create a view that maintains the original data schema and table name by aliasing select fields from the new table.

- C . Create a new table with the required schema and new fields and use Delta Lake’s deep clone functionality to sync up changes committed to one table to the corresponding table.

- D . Replace the current table definition with a logical view defined with the query logic currently writing the aggregate table; create a new table to power the customer-facing application.

- E . Add a table comment warning all users that the table schema and field names will be changing on a given date; overwrite the table in place to the specifications of the customer-facing application.
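The view-aliasing approach described in option B can be sketched as follows. This is a minimal illustration that uses SQLite from the Python standard library as a stand-in for a Lakehouse SQL engine; the table name `agg_sales_v2`, the view name `agg_sales`, and all column names are hypothetical and not taken from the question.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Hypothetical new table: renamed fields plus one added field (region).
cur.execute(
    "CREATE TABLE agg_sales_v2 (account_id INTEGER, total_amount REAL, region TEXT)"
)
cur.executemany(
    "INSERT INTO agg_sales_v2 VALUES (?, ?, ?)",
    [(1, 120.5, "us-east"), (2, 80.0, "eu-west")],
)

# View published under the original table name; aliases restore the original field names.
cur.execute(
    """
    CREATE VIEW agg_sales AS
    SELECT account_id AS acct, total_amount AS amount
    FROM agg_sales_v2
    """
)

# An existing query written against the old schema keeps working unchanged.
legacy_rows = cur.execute("SELECT acct, amount FROM agg_sales ORDER BY acct").fetchall()
legacy_columns = [d[0] for d in cur.description]
print(legacy_columns, legacy_rows)
```

In this sketch only one physical table holds data; the view is a stored query, so existing consumers see the old names while the customer-facing application reads the new table directly.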

A Delta Lake table representing metadata about content posts from users has the following schema:

user_id LONG, post_text STRING, post_id STRING, longitude FLOAT, latitude FLOAT, post_time TIMESTAMP, date DATE

This table is partitioned by the date column. A query is run with the following filter:

longitude < 20 & longitude > -20

Which statement describes how data will be filtered?

- A . Statistics in the Delta Log will be used to identify partitions that might include files in the filtered range.

- B . No file skipping will occur because the optimizer does not know the relationship between the partition column and the longitude.

- C . The Delta Engine will use row-level statistics in the transaction log to identify the files that meet the filter criteria.

- D . Statistics in the Delta Log will be used to identify data files that might include records in the filtered range.

- E . The Delta Engine will scan the parquet file footers to identify each row that meets the filter criteria.
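Data skipping based on per-file statistics can be illustrated with a small model. The sketch below is plain Python rather than Delta Lake code: it assumes each data file carries a minimum and maximum longitude, similar in spirit to the per-file statistics Delta Lake records in its transaction log, and the file paths and values are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class FileStats:
    path: str
    min_longitude: float
    max_longitude: float


def files_to_scan(files, lower, upper):
    """Keep files whose [min, max] range could hold a value v with lower < v < upper."""
    return [
        f.path
        for f in files
        if f.max_longitude > lower and f.min_longitude < upper
    ]


# Hypothetical files in a table partitioned by date (the filter is on longitude only).
files = [
    FileStats("date=2024-01-01/part-0.parquet", -170.0, -45.0),
    FileStats("date=2024-01-01/part-1.parquet", -30.0, 10.0),
    FileStats("date=2024-01-02/part-0.parquet", 25.0, 140.0),
    FileStats("date=2024-01-02/part-1.parquet", 15.0, 60.0),
]

candidates = files_to_scan(files, lower=-20.0, upper=20.0)
print(candidates)
```

The statistics can only rule files out. A file that survives may still contain rows outside the range, so its rows must be read and filtered individually.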

A small company based in the United States has recently contracted a consulting firm in India to implement several new data engineering pipelines to power artificial intelligence applications. All the company’s data is stored in regional cloud storage in the United States.

The workspace administrator at the company is uncertain about where the Databricks workspace used by the contractors should be deployed.

Assuming that all data governance considerations are accounted for, which statement accurately informs this decision?

- A . Databricks runs HDFS on cloud volume storage; as such, cloud virtual machines must be deployed in the region where the data is stored.

- B . Databricks workspaces do not rely on any regional infrastructure; as such, the decision should be made based upon what is most convenient for the workspace administrator.

- C . Cross-region reads and writes can incur significant costs and latency; whenever possible, compute should be deployed in the same region the data is stored.

- D . Databricks leverages user workstations as the driver during interactive development; as such, users should always use a workspace deployed in a region they are physically near.

- E . Databricks notebooks send all executable code from the user’s browser to virtual machines over the open internet; whenever possible, choosing a workspace region near the end users is the most secure.

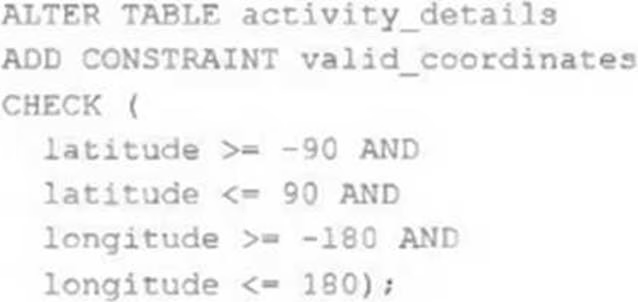

The downstream consumers of a Delta Lake table have been complaining about data quality issues impacting performance in their applications. Specifically, they have complained that invalid latitude and longitude values in the activity_details table have been breaking their ability to use other geolocation processes.

A junior engineer has written the following code to add CHECK constraints to the Delta Lake table:

A senior engineer has confirmed the above logic is correct and the valid ranges for latitude and longitude are provided, but the code fails when executed.

Which statement explains the cause of this failure?

- A . Because another team uses this table to support a frequently running application, two-phase locking is preventing the operation from committing.

- B . The activity_details table already exists; CHECK constraints can only be added during initial table creation.

- C . The activity_details table already contains records that violate the constraints; all existing data must pass CHECK constraints in order to add them to an existing table.

- D . The activity_details table already contains records; CHECK constraints can only be added prior to inserting values into a table.

- E . The current table schema does not contain the field valid_coordinates; schema evolution will need to be enabled before altering the table to add a constraint.
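How a CHECK constraint interacts with rows that already exist can be shown with a toy model. The sketch below is plain Python, not Delta Lake code: `ToyTable` is a hypothetical class, and the latitude and longitude bounds are the conventional geographic ranges, assumed here because the original code is not shown. It models a table that validates existing rows before accepting a new constraint.

```python
class ToyTable:
    """Toy table that validates existing rows before accepting a new constraint."""

    def __init__(self, rows):
        self.rows = list(rows)
        self.constraints = {}

    def add_check_constraint(self, name, predicate):
        # Existing data is checked first; any violation rejects the constraint.
        violations = [row for row in self.rows if not predicate(row)]
        if violations:
            raise ValueError(
                f"{len(violations)} existing row(s) violate constraint {name!r}"
            )
        self.constraints[name] = predicate

    def insert(self, row):
        # Once a constraint is in place, every new row must satisfy it.
        for name, predicate in self.constraints.items():
            if not predicate(row):
                raise ValueError(f"row violates constraint {name!r}")
        self.rows.append(row)


def valid_coordinates(row):
    # Assumed valid ranges: latitude in [-90, 90], longitude in [-180, 180].
    return -90 <= row["latitude"] <= 90 and -180 <= row["longitude"] <= 180


dirty = ToyTable([
    {"latitude": 45.0, "longitude": 10.0},
    {"latitude": 120.0, "longitude": 10.0},  # invalid latitude already stored
])
try:
    dirty.add_check_constraint("valid_coordinates", valid_coordinates)
    added_to_dirty = True
except ValueError:
    added_to_dirty = False

clean = ToyTable([{"latitude": 45.0, "longitude": 10.0}])
clean.add_check_constraint("valid_coordinates", valid_coordinates)
print(added_to_dirty, list(clean.constraints))
```

In this model the constraint is rejected for the table that already holds an out-of-range row and accepted for the clean table, after which further invalid inserts fail.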

Which of the following is true of Delta Lake and the Lakehouse?

- A . Because Parquet compresses data row by row, strings will only be compressed when a character is repeated multiple times.

- B . Delta Lake automatically collects statistics on the first 32 columns of each table which are leveraged in data skipping based on query filters.

- C . Views in the Lakehouse maintain a valid cache of the most recent versions of source tables at all times.

- D . Primary and foreign key constraints can be leveraged to ensure duplicate values are never entered into a dimension table.

- E . Z-order can only be applied to numeric values stored in Delta Lake tables.
