Databricks Certified Data Engineer Professional Exam Online Training

The questions for Databricks Certified Data Engineer Professional were last updated on Feb 21, 2026.

- Exam Code: Databricks Certified Data Engineer Professional

- Exam Name: Databricks Certified Data Engineer Professional Exam

- Certification Provider: Databricks

- Latest update: Feb 21, 2026

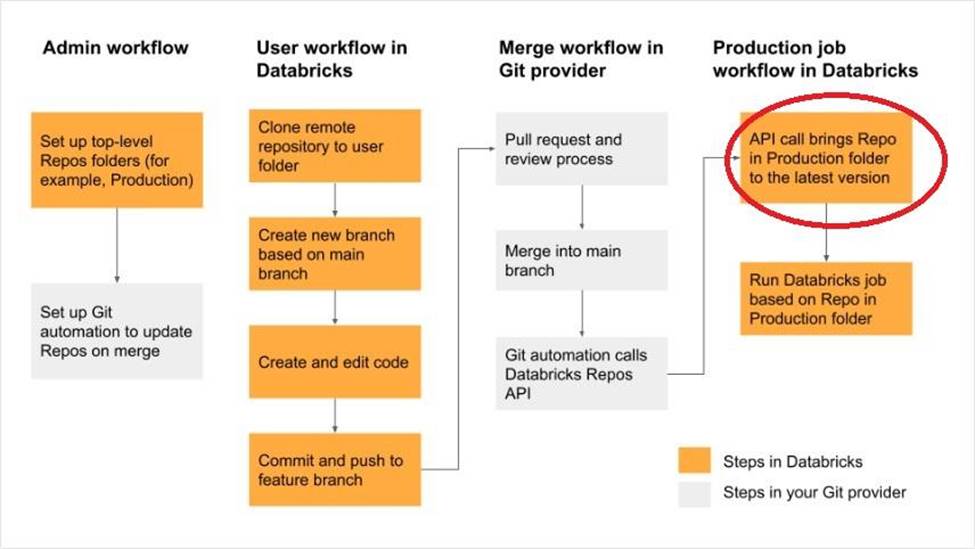

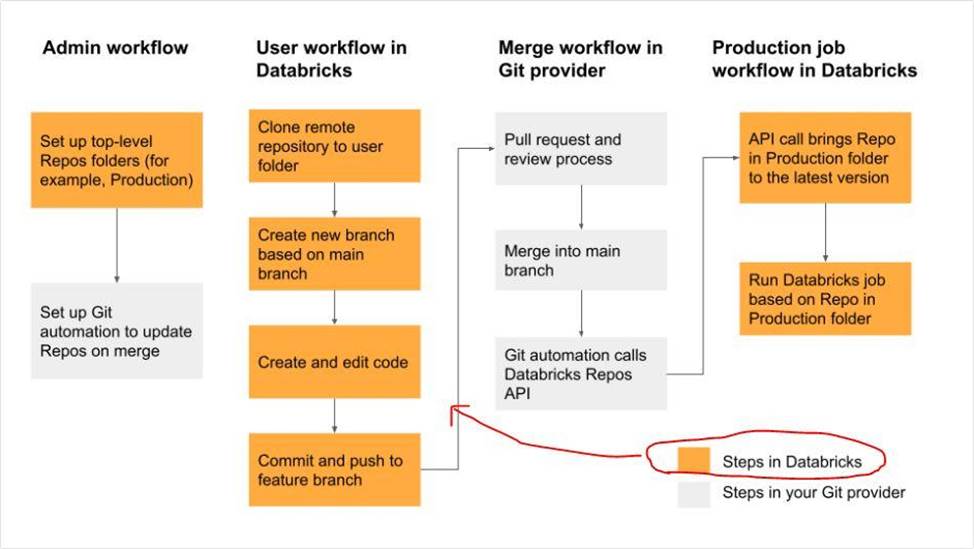

Which of the following developer operations in a CI/CD flow can be implemented in Databricks Repos?

- A . Merge when code is committed

- B . Pull request and review process

- C . Trigger Databricks Repos API to pull the latest version of code into production folder

- D . Resolve merge conflicts

- E . Delete a branch
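For context on option C, the Databricks Repos REST API exposes an update endpoint (`PATCH /api/2.0/repos/{repo_id}`) that checks a repo out to a given branch and pulls its latest commit. The sketch below only builds such a request with the Python standard library. The workspace URL, token, and repo ID are placeholder assumptions, and nothing is sent until `urlopen` is called.

```python
import json
import urllib.request


def build_repo_update_request(host, token, repo_id, branch):
    """Build (but do not send) a Repos API request that pulls the latest commit of `branch`."""
    url = f"{host.rstrip('/')}/api/2.0/repos/{repo_id}"
    body = json.dumps({"branch": branch}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PATCH",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


# Placeholder values, not real credentials or IDs.
request = build_repo_update_request(
    host="https://example.cloud.databricks.com",
    token="dapi-EXAMPLE",
    repo_id=123,
    branch="main",
)
print(request.get_method(), request.full_url)
# To actually send it from a CI/CD job: urllib.request.urlopen(request)
```

A CI/CD pipeline would typically run a call like this against the production folder's repo after the merge has happened in the Git provider.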

Identify which one of the statements below can query a Delta table using the PySpark DataFrame API.

- A . spark.read.mode("delta").table("table_name")

- B . spark.read.table.delta("table_name")

- C . spark.read.table("table_name")

- D . spark.read.format("delta").LoadTableAs("table_name")

- E . spark.read.format("delta").TableAs("table_name")
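As a minimal sketch of reading a registered table through the DataFrame API, the helper below wraps `DataFrameReader.table`, which is a real PySpark method. The function name `read_delta_table` is hypothetical, and `spark` is assumed to be an active SparkSession, as Databricks notebooks provide.

```python
def read_delta_table(spark, table_name):
    """Return a DataFrame for a table registered in the metastore.

    `spark` is assumed to be an active SparkSession. The metastore records
    the table's format, so no explicit format call is needed here.
    """
    return spark.read.table(table_name)


# Usage inside a notebook, where `spark` already exists:
#   df = read_delta_table(spark, "table_name")
#   df.show()
```

`spark.table("table_name")` is an equivalent shorthand for the same read.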

How can the VACUUM and OPTIMIZE commands be used to manage Delta Lake?

- A . VACUUM command can be used to compact small parquet files, and the OPTIMIZE command can be used to delete parquet files that are marked for deletion/unused.

- B . VACUUM command can be used to delete empty/blank parquet files in a delta table, OPTIMIZE command can be used to update stale statistics on a delta table.

- C . VACUUM command can be used to compress the parquet files to reduce the size of the table, OPTIMIZE command can be used to cache frequently used delta tables for better performance.

- D . VACUUM command can be used to delete empty/blank parquet files in a delta table, OPTIMIZE command can be used to cache frequently used delta tables for better performance.

- E . OPTIMIZE command can be used to compact small parquet files, and the VACUUM command can be used to delete parquet files that are marked for deletion/unused. (Correct)
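The two commands in the answer marked correct can be sketched as a small maintenance routine. The helper below only generates the SQL text. The function name and the table name `sales.orders` are hypothetical, and 168 hours is used because it matches Delta Lake's default 7-day retention threshold.

```python
def maintenance_statements(table_name, retain_hours=168):
    """Return SQL that compacts a Delta table, then removes unreferenced files."""
    if retain_hours < 0:
        raise ValueError("retain_hours must be non-negative")
    return [
        # Compact many small Parquet files into fewer, larger ones.
        f"OPTIMIZE {table_name}",
        # Delete files no longer referenced by the table and older than the threshold.
        f"VACUUM {table_name} RETAIN {retain_hours} HOURS",
    ]


for statement in maintenance_statements("sales.orders"):
    print(statement)
```

In a notebook, each statement could be passed to `spark.sql(...)`. Running OPTIMIZE first matters because the small files it replaces only become eligible for VACUUM once they age past the retention threshold.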

Which of the following statements is correct about how Delta Lake implements a lakehouse?

- A . Delta Lake uses a proprietary format to write data, optimized for cloud storage

- B . Using Apache Hadoop on cloud object storage

- C . Delta Lake always stores metadata in memory rather than in storage

- D . Delta Lake uses open source, open format, optimized cloud storage and scalable metadata

- E . Delta Lake stores data and metadata in the compute's memory

What are the different ways you can schedule a job in a Databricks workspace?

- A . Continuous, Incremental

- B . On-Demand runs, File notification from Cloud object storage

- C . Cron, On Demand runs

- D . Cron, File notification from Cloud object storage

- E . Once, Continuous
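To make the cron option concrete, here is a rough sketch of a job definition using the `schedule` field names from the Databricks Jobs API 2.1 (`quartz_cron_expression`, `timezone_id`, `pause_status`). The job name, notebook path, and helper function are hypothetical, and compute configuration is omitted for brevity.

```python
import json


def scheduled_job_settings(name, notebook_path, cron, timezone="UTC"):
    """Build a Jobs API 2.1 style payload for a cron-scheduled notebook job."""
    return {
        "name": name,
        "schedule": {
            "quartz_cron_expression": cron,  # Quartz syntax: sec min hour day month weekday
            "timezone_id": timezone,
            "pause_status": "UNPAUSED",
        },
        "tasks": [
            {
                "task_key": "main",
                "notebook_task": {"notebook_path": notebook_path},
            }
        ],
    }


# Hypothetical job that runs every day at 06:00 UTC.
settings = scheduled_job_settings(
    name="nightly-load",
    notebook_path="/Repos/prod/etl/load",
    cron="0 0 6 * * ?",
)
print(json.dumps(settings, indent=2))
```

An on-demand run needs no schedule block: the same job can be started manually from the UI or through the `run-now` endpoint of the Jobs API.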

Which of the following types of tasks cannot be set up through a job?

- A . Notebook

- B . Delta Live Tables pipeline

- C . Spark Submit

- D . Python

- E . Databricks SQL Dashboard refresh

Which of the following describes how Databricks Repos can help facilitate CI/CD workflows on the Databricks Lakehouse Platform?

- A . Databricks Repos can facilitate the pull request, review, and approval process before merging branches

- B . Databricks Repos can merge changes from a secondary Git branch into a main Git branch

- C . Databricks Repos can be used to design, develop, and trigger Git automation pipelines

- D . Databricks Repos can store the single-source-of-truth Git repository

- E . Databricks Repos can commit or push code changes to trigger a CI/CD process
