Databricks Certified Data Engineer Professional Exam Online Training


The questions for Databricks Certified Data Engineer Professional were last updated on Feb 23, 2026.

- Exam Code: Databricks Certified Data Engineer Professional

- Exam Name: Databricks Certified Data Engineer Professional Exam

- Certification Provider: Databricks

- Latest update: Feb 23, 2026

table(table_name))

- A . format, checkpointlocation, schemalocation, overwrite

- B . cloudfiles.format, checkpointlocation, cloudfiles.schemalocation, overwrite

- C . cloudfiles.format, cloudfiles.schemalocation, checkpointlocation, mergeSchema

- D . cloudfiles.format, cloudfiles.schemalocation, checkpointlocation, overwrite

- E . cloudfiles.format, cloudfiles.schemalocation, checkpointlocation, append
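
For orientation, the following is a minimal sketch of an Auto Loader ingestion stream that sets the four kinds of settings the options above refer to: the file format and schema location on the cloudFiles reader, the checkpoint location on the writer, and an output mode. The source path, table name, JSON format and the choice of append mode are illustrative assumptions, not values recovered from the question; the cloudFiles source itself only exists on a Databricks runtime. Spark option keys are case-insensitive, so cloudfiles.schemalocation and cloudFiles.schemaLocation refer to the same setting.

```python
# Sketch of an Auto Loader (cloudFiles) stream. All paths, the table name and
# the JSON format are hypothetical placeholders chosen for illustration.

AUTOLOADER_READ_OPTIONS = {
    "cloudFiles.format": "json",                          # format of incoming files
    "cloudFiles.schemaLocation": "/tmp/schemas/orders",   # where the inferred schema is tracked
}

WRITE_OPTIONS = {
    "checkpointLocation": "/tmp/checkpoints/orders",      # stream progress bookkeeping
}

OUTPUT_MODE = "append"  # each new file only adds rows to the target table


def start_autoloader(spark, source_path, table_name):
    """Start the stream; needs a Databricks runtime for the cloudFiles source."""
    reader = spark.readStream.format("cloudFiles")
    for key, value in AUTOLOADER_READ_OPTIONS.items():
        reader = reader.option(key, value)
    writer = reader.load(source_path).writeStream
    for key, value in WRITE_OPTIONS.items():
        writer = writer.option(key, value)
    # toTable() is the PySpark DataStreamWriter method that writes to a table.
    return writer.outputMode(OUTPUT_MODE).toTable(table_name)
```

On Databricks this would be called as start_autoloader(spark, "/landing/orders", "bronze_orders"), with both arguments again being hypothetical.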

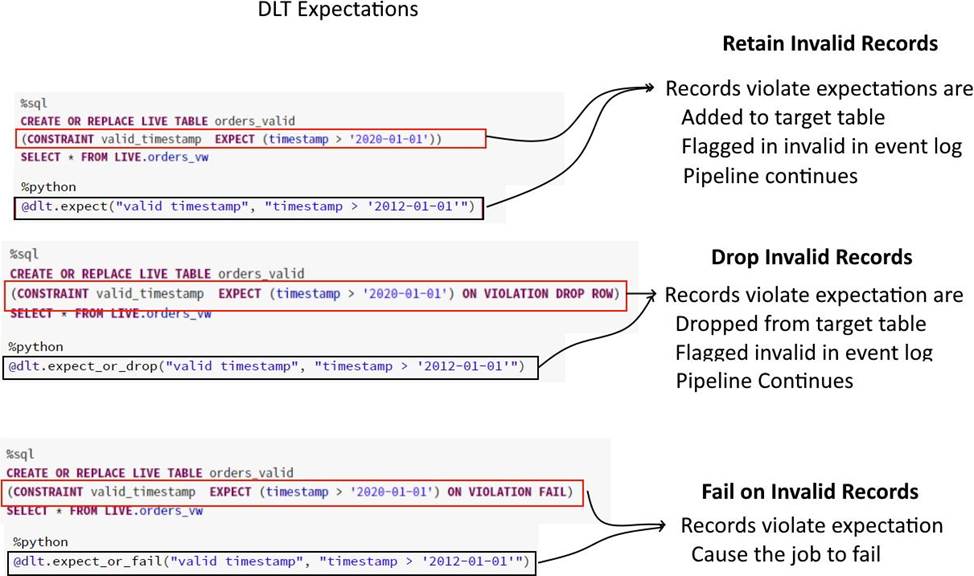

A dataset has been defined using Delta Live Tables and includes an expectations clause: CONSTRAINT valid_timestamp EXPECT (timestamp > '2020-01-01') ON VIOLATION FAIL

What is the expected behavior when a batch containing records that violate this constraint is processed?

- A . Records that violate the expectation are added to the target dataset and recorded as invalid in the event log.

- B . Records that violate the expectation are dropped from the target dataset and recorded as invalid in the event log.

- C . Records that violate the expectation cause the job to fail.

- D . Records that violate the expectation are added to the target dataset and flagged as invalid in a field added to the target dataset.

- E . Records that violate the expectation are dropped from the target dataset and loaded into a quarantine table.
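
To make the difference between the violation policies concrete, here is a plain-Python simulation; it is not the DLT API. In DLT SQL, omitting ON VIOLATION keeps invalid rows and records them in the event log, ON VIOLATION DROP ROW drops them, and ON VIOLATION FAIL UPDATE makes the update fail. The function apply_expectation, the ExpectationViolation exception and the policy names "retain", "drop" and "fail" are hypothetical stand-ins for those three behaviors.

```python
from datetime import date


class ExpectationViolation(Exception):
    """Hypothetical stand-in for a DLT update failing on a FAIL expectation."""


POLICIES = {"retain", "drop", "fail"}


def apply_expectation(records, predicate, on_violation="retain"):
    """Simulate an expectation on one batch; returns (rows_written, invalid_count)."""
    if on_violation not in POLICIES:
        raise ValueError(f"unknown policy: {on_violation}")
    valid = [r for r in records if predicate(r)]
    invalid_count = len(records) - len(valid)
    if on_violation == "fail" and invalid_count:
        # FAIL: nothing from this batch is written; the whole update stops.
        raise ExpectationViolation(f"{invalid_count} record(s) violated the expectation")
    if on_violation == "drop":
        return valid, invalid_count
    # "retain" (or "fail" with no violations): every record is written.
    return list(records), invalid_count


def valid_timestamp(record):
    """Mirrors EXPECT (timestamp > '2020-01-01')."""
    return record["timestamp"] > date(2020, 1, 1)
```

With a batch containing one record dated 2019-12-31, the "retain" and "drop" policies return the batch with or without that record, while "fail" raises, which corresponds to option C.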

The current ELT pipeline receives data from the operations team once a day, so you set up an Auto Loader process that runs once a day using trigger(once=True) and scheduled a job to run it daily. The operations team recently rolled out a new feature that lets them send data every minute. What change do you need to make to Auto Loader to process the data every minute?

- A . Convert AUTO LOADER to structured streaming

- B . Change AUTO LOADER trigger to .trigger(ProcessingTime = "1 minute")

- C . Set up a job cluster to run the notebook once a minute

- D . Enable stream processing

- E . Change AUTO LOADER trigger to ("1 minute")
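
The trigger choice can be sketched as below. In PySpark the keyword arguments of DataStreamWriter.trigger() are lowercase-first (processingTime, once), and a processing-time trigger assumes the stream keeps running rather than being restarted by a daily job. The helper names trigger_kwargs and configure_trigger are hypothetical.

```python
def trigger_kwargs(every_minute: bool) -> dict:
    """Keyword arguments for PySpark's DataStreamWriter.trigger()."""
    if every_minute:
        # Long-running stream that starts a micro-batch every minute.
        return {"processingTime": "1 minute"}
    # Process everything currently available in one batch, then stop.
    return {"once": True}


def configure_trigger(writer, every_minute: bool):
    """Apply the trigger to a DataStreamWriter (or any object with .trigger)."""
    return writer.trigger(**trigger_kwargs(every_minute))
```

Switching from the daily setup to the per-minute setup only changes the dictionary passed to trigger(); the reader options and checkpoint location stay the same.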

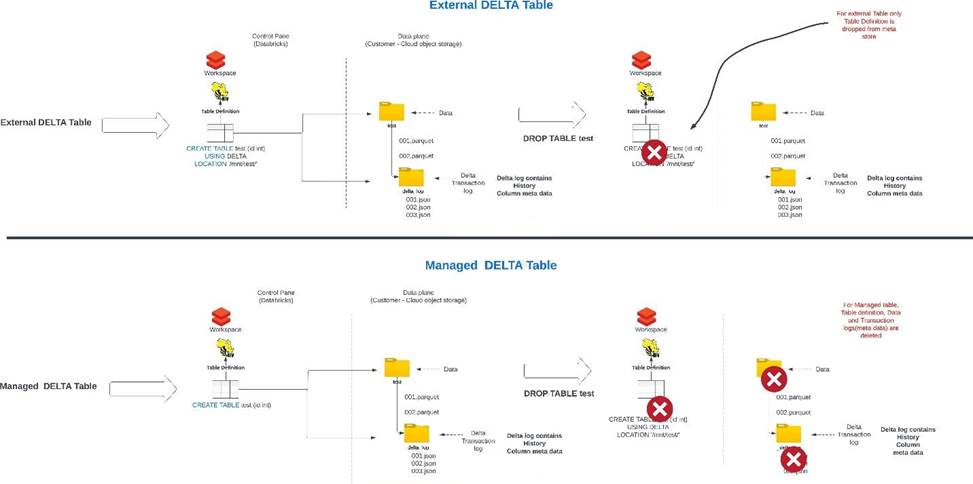

When you drop an external Delta table using the SQL command DROP TABLE table_name, how does this affect the metadata (Delta log, history) and the data in storage?

- A . Drops table from metastore, metadata (delta log, history) and data in storage

- B . Drops table from metastore, data but keeps metadata (delta log, history) in storage

- C . Drops table from metastore, metadata (delta log, history) but keeps the data in storage

- D . Drops table from metastore, but keeps metadata (delta log, history) and data in storage

- E . Drops table from metastore and data in storage but keeps metadata (delta log, history)
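
The distinction between external and managed tables can be illustrated with a toy model. This is a deliberately simplified sketch, not Databricks code: the ToyLakehouse class, its file names and the storage paths are hypothetical, and it ignores any retention delay a metastore may apply before deleting a managed table's files. It shows the key point: dropping an external table removes only the metastore entry, while the _delta_log directory and the data files stay in storage.

```python
from dataclasses import dataclass, field


@dataclass
class ToyLakehouse:
    """Toy metastore plus object store; paths and file names are illustrative."""
    metastore: dict = field(default_factory=dict)  # table name -> (location, is_managed)
    storage: dict = field(default_factory=dict)    # file path -> contents

    def create_table(self, name, location, managed):
        self.metastore[name] = (location, managed)
        self.storage[f"{location}/_delta_log/00000000000000000000.json"] = "commit 0"
        self.storage[f"{location}/part-00000.parquet"] = "rows"

    def drop_table(self, name):
        location, managed = self.metastore.pop(name)  # always leaves the metastore
        if managed:
            # Managed table: the table's files are deleted as well.
            for path in [p for p in self.storage if p.startswith(location + "/")]:
                del self.storage[path]
        # External table: the Delta log (history) and data files are left untouched.
```

Because the Delta log survives, re-creating an external table over the same location would pick up the existing data and history again.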

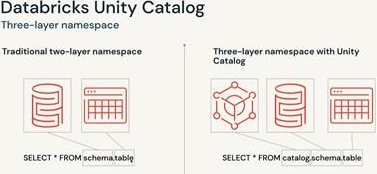

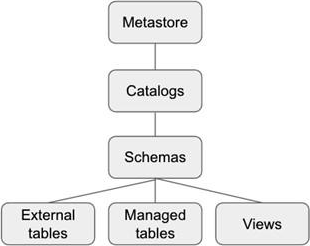

How do you access or use tables in Unity Catalog?

- A . schema_name.table_name

- B . schema_name.catalog_name.table_name

- C . catalog_name.table_name

- D . catalog_name.database_name.schema_name.table_name

- E . catalog_name.schema_name.table_name
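
Unity Catalog uses a three-level namespace, catalog.schema.table. The small helper below is a hypothetical sketch that builds such a name with each part backtick-quoted, so that identifiers containing characters such as hyphens still parse. The result can be passed to spark.table() or used in SQL. The example names main, sales and orders are illustrative only.

```python
def qualified_name(catalog: str, schema: str, table: str) -> str:
    """Build a Unity Catalog catalog.schema.table name with quoted parts."""
    parts = (catalog, schema, table)
    if any(not p for p in parts):
        raise ValueError("catalog, schema and table must all be non-empty")
    # Backticks quote each identifier; embedded backticks are escaped by doubling.
    return ".".join("`" + p.replace("`", "``") + "`" for p in parts)
```

For example, qualified_name("main", "sales", "orders") produces `main`.`sales`.`orders`, which resolves the same way as main.sales.orders.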

Latest Databricks Certified Data Engineer Professional Dumps Valid Version with 278 Q&As