Databricks Databricks Certified Data Engineer Professional Databricks Certified Data Engineer Professional Exam Online Training

Databricks Databricks Certified Data Engineer Professional Online Training

The questions for Databricks Certified Data Engineer Professional were last updated at Feb 24,2026.

- Exam Code: Databricks Certified Data Engineer Professional

- Exam Name: Databricks Certified Data Engineer Professional Exam

- Certification Provider: Databricks

- Latest update: Feb 24,2026

What is the purpose of a gold layer in Multi-hop architecture?

- A . Optimizes ETL throughput and analytic query performance

- B . Eliminate duplicate records

- C . Preserves grain of original data, without any aggregations

- D . Data quality checks and schema enforcement

- E . Powers ML applications, reporting, dashboards and adhoc reports.

What is the purpose of a gold layer in Multi-hop architecture?

- A . Optimizes ETL throughput and analytic query performance

- B . Eliminate duplicate records

- C . Preserves grain of original data, without any aggregations

- D . Data quality checks and schema enforcement

- E . Powers ML applications, reporting, dashboards and adhoc reports.

What is the purpose of a gold layer in Multi-hop architecture?

- A . Optimizes ETL throughput and analytic query performance

- B . Eliminate duplicate records

- C . Preserves grain of original data, without any aggregations

- D . Data quality checks and schema enforcement

- E . Powers ML applications, reporting, dashboards and adhoc reports.

Which of the following programming languages can be used to build a Databricks SQL dashboard?

- A . Python

- B . Scala

- C . SQL

- D . R

- E . All of the above

You are working on a table called orders which contains data for 2021 and you have the second table called orders_archive which contains data for 2020, you need to combine the data from two tables and there could be a possibility of the same rows between both the tables, you are looking to combine the results from both the tables and eliminate the duplicate rows, which of the following SQL statements helps you accomplish this?

- A . SELECT * FROM orders UNION SELECT * FROM orders_archive (Correct)

- B . SELECT * FROM orders INTERSECT SELECT * FROM orders_archive

- C . SELECT * FROM orders UNION ALL SELECT * FROM orders_archive

- D . SELECT * FROM orders_archive MINUS SELECT * FROM orders

- E . SELECT distinct * FROM orders JOIN orders_archive on order.id = or-ders_archive.id

Once a cluster is deleted, below additional actions need to performed by the administrator

- A . Remove virtual machines but storage and networking are automatically dropped

- B . Drop storage disks but Virtual machines and networking are automatically dropped

- C . Remove networking but Virtual machines and storage disks are automatically dropped

- D . Remove logs

- E . No action needs to be performed. All resources are automatically removed.

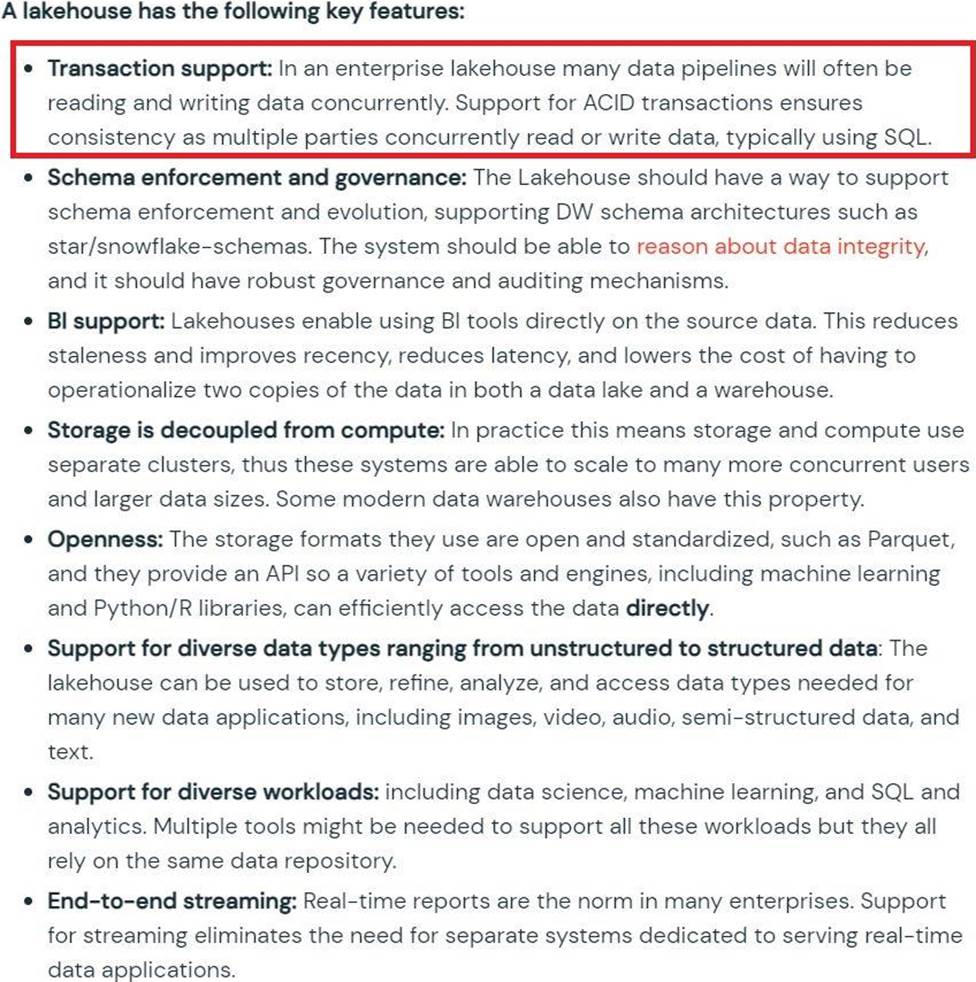

Which of the statements are correct about lakehouse?

- A . Lakehouse only supports Machine learning workloads and Data warehouses support BI workloads

- B . Lakehouse only supports end-to-end streaming workloads and Data warehouses support Batch workloads

- C . Lakehouse does not support ACID

- D . In Lakehouse Storage and compute are coupled

- E . Lakehouse supports schema enforcement and evolution

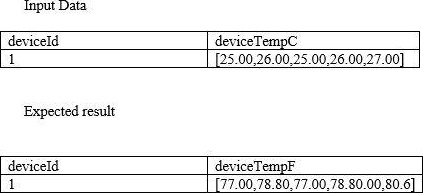

You are working on IOT data where each device has 5 reading in an array collected in Celsius, you were asked to covert each individual reading from Celsius to Fahrenheit, fill in the blank with an appropriate function that can be used in this scenario.

Schema: deviceId INT, deviceTemp ARRAY<double>

SELECT deviceId, __(deviceTempC,i-> (i * 9/5) + 32) as deviceTempF

FROM sensors

- A . APPLY

- B . MULTIPLY

- C . ARRAYEXPR

- D . TRANSFORM

- E . FORALL

What is the type of table created when you issue SQL DDL command CREATE TABLE sales (id int, units int)

- A . Query fails due to missing location

- B . Query fails due to missing format

- C . Managed Delta table

- D . External Table

- E . Managed Parquet table

You are currently working on a production job failure with a job set up in job clusters due to a data issue, what cluster do you need to start to investigate and analyze the data?

- A . A Job cluster can be used to analyze the problem

- B . All-purpose cluster/ interactive cluster is the recommended way to run commands and view the data.

- C . Existing job cluster can be used to investigate the issue

- D . Databricks SQL Endpoint can be used to investigate the issue

Latest Databricks Certified Data Engineer Professional Dumps Valid Version with 278 Q&As

Latest And Valid Q&A | Instant Download | Once Fail, Full Refund