Databricks Databricks Certified Data Engineer Associate Databricks Certified Data Engineer Associate Exam Online Training

Databricks Databricks Certified Data Engineer Associate Online Training

The questions for Databricks Certified Data Engineer Associate were last updated at Feb 24,2026.

- Exam Code: Databricks Certified Data Engineer Associate

- Exam Name: Databricks Certified Data Engineer Associate Exam

- Certification Provider: Databricks

- Latest update: Feb 24,2026

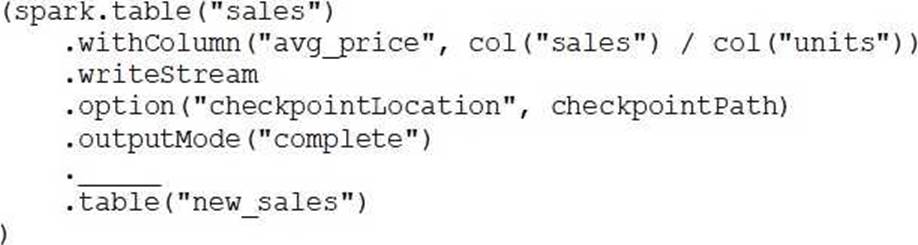

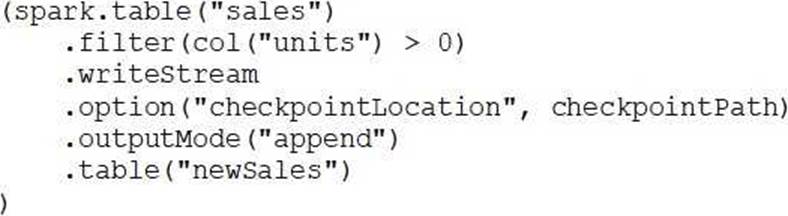

A data engineer has configured a Structured Streaming job to read from a table, manipulate the data, and then perform a streaming write into a new table.

The cade block used by the data engineer is below:

If the data engineer only wants the query to execute a micro-batch to process data every 5 seconds, which of the following lines of code should the data engineer use to fill in the blank?

- A . trigger("5 seconds")

- B . trigger()

- C . trigger(once="5 seconds")

- D . trigger(processingTime="5 seconds")

- E . trigger(continuous="5 seconds")

A dataset has been defined using Delta Live Tables and includes an expectations clause:

CONSTRAINT valid_timestamp EXPECT (timestamp > ‘2020-01-01’) ON VIOLATION DROP ROW

What is the expected behavior when a batch of data containing data that violates these constraints is

processed?

- A . Records that violate the expectation are dropped from the target dataset and loaded into a quarantine table.

- B . Records that violate the expectation are added to the target dataset and flagged as invalid in a field added to the target dataset.

- C . Records that violate the expectation are dropped from the target dataset and recorded as invalid in the event log.

- D . Records that violate the expectation are added to the target dataset and recorded as invalid in the event log.

- E . Records that violate the expectation cause the job to fail.

Which of the following describes when to use the CREATE STREAMING LIVE TABLE (formerly CREATE INCREMENTAL LIVE TABLE) syntax over the CREATE LIVE TABLE syntax when creating Delta Live Tables (DLT) tables using SQL?

- A . CREATE STREAMING LIVE TABLE should be used when the subsequent step in the DLT pipeline is static.

- B . CREATE STREAMING LIVE TABLE should be used when data needs to be processed incrementally.

- C . CREATE STREAMING LIVE TABLE is redundant for DLT and it does not need to be used.

- D . CREATE STREAMING LIVE TABLE should be used when data needs to be processed through complicated aggregations.

- E . CREATE STREAMING LIVE TABLE should be used when the previous step in the DLT pipeline is static.

A data engineer is designing a data pipeline. The source system generates files in a shared directory that is also used by other processes. As a result, the files should be kept as is and will accumulate in the directory. The data engineer needs to identify which files are new since the previous run in the pipeline, and set up the pipeline to only ingest those new files with each run.

Which of the following tools can the data engineer use to solve this problem?

- A . Unity Catalog

- B . Delta Lake

- C . Databricks SQL

- D . Data Explorer

- E . Auto Loader

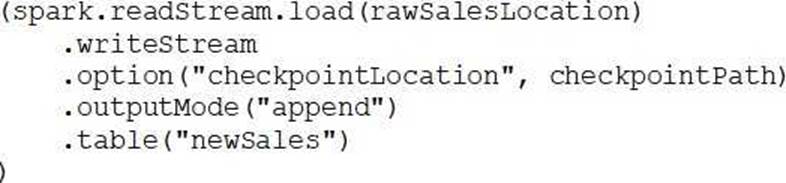

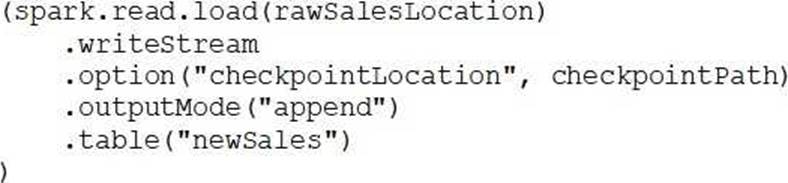

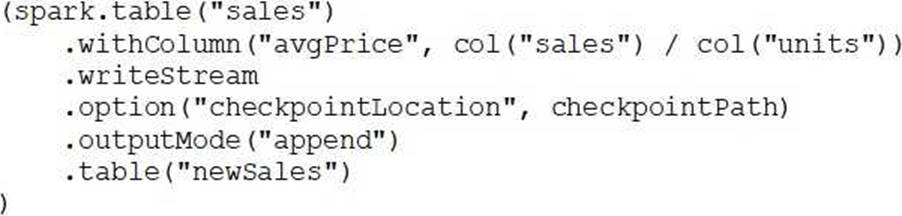

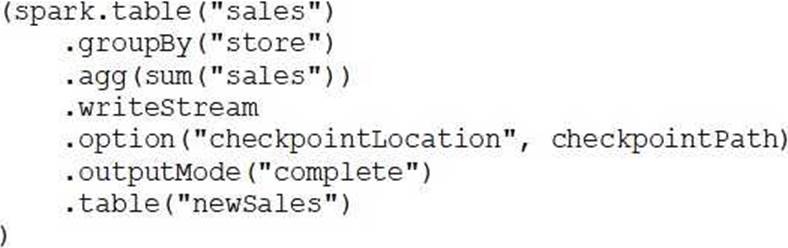

Which of the following Structured Streaming queries is performing a hop from a Silver table to a Gold table?

A)

B)

C)

D)

E)

- A . Option A

- B . Option B

- C . Option C

- D . Option D

- E . Option E

A data engineer has three tables in a Delta Live Tables (DLT) pipeline. They have configured the pipeline to drop invalid records at each table. They notice that some data is being dropped due to quality concerns at some point in the DLT pipeline. They would like to determine at which table in their pipeline the data is being dropped.

Which of the following approaches can the data engineer take to identify the table that is dropping the records?

- A . They can set up separate expectations for each table when developing their DLT pipeline.

- B . They cannot determine which table is dropping the records.

- C . They can set up DLT to notify them via email when records are dropped.

- D . They can navigate to the DLT pipeline page, click on each table, and view the data quality statistics.

- E . They can navigate to the DLT pipeline page, click on the “Error” button, and review the present errors.

A data engineer has a single-task Job that runs each morning before they begin working. After identifying an upstream data issue, they need to set up another task to run a new notebook prior to the original task.

Which of the following approaches can the data engineer use to set up the new task?

- A . They can clone the existing task in the existing Job and update it to run the new notebook.

- B . They can create a new task in the existing Job and then add it as a dependency of the original task.

- C . They can create a new task in the existing Job and then add the original task as a dependency of the new task.

- D . They can create a new job from scratch and add both tasks to run concurrently.

- E . They can clone the existing task to a new Job and then edit it to run the new notebook.

An engineering manager wants to monitor the performance of a recent project using a Databricks SQL query. For the first week following the project’s release, the manager wants the query results to be updated every minute. However, the manager is concerned that the compute resources used for the query will be left running and cost the organization a lot of money beyond the first week of the project’s release.

Which of the following approaches can the engineering team use to ensure the query does not cost the organization any money beyond the first week of the project’s release?

- A . They can set a limit to the number of DBUs that are consumed by the SQL Endpoint.

- B . They can set the query’s refresh schedule to end after a certain number of refreshes.

- C . They cannot ensure the query does not cost the organization money beyond the first week of the project’s release.

- D . They can set a limit to the number of individuals that are able to manage the query’s refresh schedule.

- E . They can set the query’s refresh schedule to end on a certain date in the query scheduler.

A data analysis team has noticed that their Databricks SQL queries are running too slowly when connected to their always-on SQL endpoint. They claim that this issue is present when many members of the team are running small queries simultaneously. They ask the data engineering team for help. The data engineering team notices that each of the team’s queries uses the same SQL endpoint.

Which of the following approaches can the data engineering team use to improve the latency of the team’s queries?

- A . They can increase the cluster size of the SQL endpoint.

- B . They can increase the maximum bound of the SQL endpoint’s scaling range.

- C . They can turn on the Auto Stop feature for the SQL endpoint.

- D . They can turn on the Serverless feature for the SQL endpoint.

- E . They can turn on the Serverless feature for the SQL endpoint and change the Spot Instance Policy to “Reliability Optimized.”

A data engineer wants to schedule their Databricks SQL dashboard to refresh once per day, but they only want the associated SQL endpoint to be running when it is necessary.

Which of the following approaches can the data engineer use to minimize the total running time of the SQL endpoint used in the refresh schedule of their dashboard?

- A . They can ensure the dashboard’s SQL endpoint matches each of the queries’ SQL endpoints.

- B . They can set up the dashboard’s SQL endpoint to be serverless.

- C . They can turn on the Auto Stop feature for the SQL endpoint.

- D . They can reduce the cluster size of the SQL endpoint.

- E . They can ensure the dashboard’s SQL endpoint is not one of the included query’s SQL endpoint.

Latest Databricks Certified Data Engineer Associate Dumps Valid Version with 87 Q&As

Latest And Valid Q&A | Instant Download | Once Fail, Full Refund